The first results when searching for “Artificial Intelligence in video games” in Google speak for themselves: Artificial Intelligence (AI) in video games is often unsatisfactory. As a gamer, it is moreover frequent to be confronted with situations that lose credibility because of the AI behavior. Is this a real problem? Why does AI seem to stagnate in video games while the rest (graphics, complexity, gameplay…..) is continuously improving? While more and more IAs are able to compete with humans on board games (chess, Go) but also more recently on strategy (Dota 2), why do they seem to tirelessly get stuck in the background or repeat the same tirades when we play? This is what we will try to study in this complete dossier.

State of the art and history

Artificial intelligence and board games such as chess or Go have always been linked since the emergence of the discipline. Indeed, they provide a fertile ground to evaluate the different AI methods and algorithms developed. When the first video games appeared, AI research was in full swing. It is therefore logical that interest has been focused on this new medium. However and for a long time, research has mainly led to the improvement of video games and not vice versa.

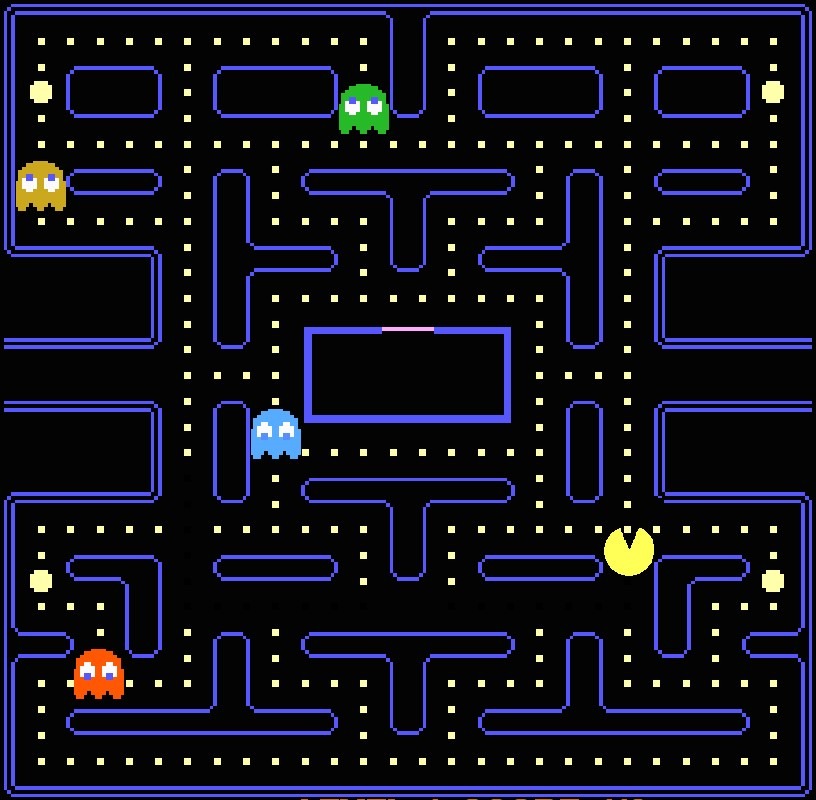

The main goal of AI in a video game is to make the world portrayed by the game as coherent and humane as possible in order to enhance the player’s enjoyment and immersion. This goal of recreating a world as close to reality can be achieved through many different approaches such as improving the behavior of non-player characters (NPCs) or generating content automatically. Like board games, early video games often featured a confrontation between two players (such as Pong in 1972). Since two players were playing against each other, no AI was required in this type of game. However, games quickly became more complex and many elements began to exhibit behaviors independent of the player’s actions making the game more interactive, such as ghosts in Pac Man.

So it quickly becomes necessary to implement AI in video games to be able to generate such behaviors. Since then, AI and video games are irremediably linked, as AI has become more and more important in game systems.

Now, whatever the type of video games (racing games, strategy games, role-playing games, platform games, etc …), there is a good chance that many elements of the game are managed by AI algorithms. However, this strong overlap between AI and game system has not always been smooth and, even today, some integrations are deficient.

The links between AI and video games

At the beginning of the field, there was for a long time a gap between classical AI research and AI actually implemented by developers in video games. Indeed, there were major differences between the two in the knowledge, the problems encountered but also the ways to solve these problems. The main reason behind these differences probably comes from the fact that these two approaches to AI do not really have the same objectives.

Classical AI research generally aspires to improve or create new algorithms to advance the state of the art. On the other hand, the development of an AI in a video game aims to create a coherent system that integrates as well as possible into the game design in order to be fun for the player. Thus, a high-performance AI that is not well integrated into the gameplay may serve the game more than it will improve it.

Developing an AI for a video game therefore often requires to find engineering solutions to problems that are little or not at all addressed by classical AI research. For example, an AI algorithm in a game is highly constrained in terms of computing power, memory and execution time. Indeed, the game must run through a console or an “ordinary” computer and it must not be slowed down by AI. This is why some state of the art resource-intensive AI solutions could only be implemented in video games several years after their use in traditional AI.

Possible solutions to solve a given problem are also a distinction between the two fields. For example, it is often possible to get around a difficult AI problem by slightly modifying the game design. Moreover, in a video game, an AI can afford to cheat (by having access to information it is not supposed to have, or by having more possibilities than the player for example) in order to compensate for its lack of performance. Cheating an AI in itself is not a problem as long as it improves the player experience. However, in general, it is difficult to disguise the fact that an AI cheats and this can lead to frustration for the player.

It can be noted that most of the major video game concepts (racing games, platform games, strategy games, shooting games, etc…) were created around the 80s and 90s. At that time, the development of a complex AI in a game was something difficult to achieve given the existing resources and methods. Game designers of the time therefore often had to deal with the lack of AI by making appropriate choices of Game Design. Some games were even thought to not need AI. These basic designs have been inherited by the different generations of video games, many of which remain fairly faithful to the genre’s working guns. This may also explain why AI systems in video games are generally quite basic when compared to traditional research approaches.

Thus, the methods that we will present may surprise by their relative simplicity. Indeed, when we hear about AI today, it refers to Deep Learning and thus to very complex and abstract neural networks. However, Deep Learning is only a sub-domain of AI, and symbolic methods have been the commonly used approaches for a long time. These approaches are now referred to as GOFAI (“Good Old-Fashioned Artificial Intelligence” or “bonne vieille AI démodée” in French). As its nickname indicates, symbolic AI is clearly no longer the most popular approach, but it can nevertheless solve many problems, especially in the context of video games.

AI for video games

The main criterion of success of an AI in a classic game is probably its level of integration and nesting in the game design. For example, NPCs with unjustifiable behavior can break the intended game experience and thus the player’s immersion.

One of the most classic examples in old games is when Nonplayer Characters (NPCs) get stuck in the background. Conversely, an AI that fully participates in the game experience will necessarily have a positive impact on the player’s experience, partly because players are more or less used to the sometimes incoherent behavior of AI.

Depending on the type of game, an AI can have a wide variety of tasks to solve. So we will not look at all the possible uses of an AI in a game but rather the main ones. These will include the management of the behavior of NPCs (allies or enemies) in shooting games or role-playing games, as well as the higher level management of an agent who must play a strategy game.

NPC's control

There are many approaches to developing realistic behaviors in a video game. However, realism alone is not enough to make a game fun. Indeed, it should not be forgotten that the goal of an enemy in a game is generally to be eliminated by the player. A game with overly realistic opponents will not necessarily be fun to play. For example, NPCs who spend their time running away may annoy the player more than anything else. So there is a compromise to be found in the behavior of NPCs that is not necessarily easy to throw away.

In this part, we will take a look at the main solutions to try to solve this problem. But first, let’s review some important notions.

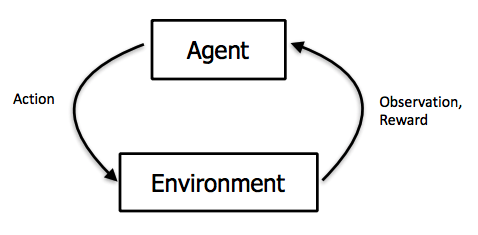

Agents

Probably the first notion when we talk about Artificial Intelligence for video games, an agent is an entity that can obtain information about its environment and make decisions based on this information in order to achieve an objective. In a video game, an agent often represents an NPC, but it can also represent a more complete system such as an opponent in a strategy game. Therefore, there can be several levels of agents communicating with each other in the same game.

States

A state is a unique configuration of the environment in which an agent is located. The state can change when an agent (or the player) performs an action. For an agent, the set of possible states is called the state space. This is an important notion since the basic idea of most AI methods is to browse or explore the state space to find the best way to act according to the current situation. The characteristics of the state space thus impact the nature of the AI methods that can be used.

For example, if we consider an agent that defines the behavior of an NPC, the state space can be defined in a simple way: it is the set of situations in which the NPC can find itself. You can set up a state where the NPC does not see the player, a state where the NPC sees the player, a state when the player shoots at the NPC and a state when the NPC is injured. By considering all possible situations for the NPC, we can determine the actions that the NPC can take and the consequences of these actions in terms of changing the state (the space of states is easily browsed). We can therefore fully define the agent’s behavior and choose the most relevant actions at each moment according to what is happening.

Conversely, in the case of an agent playing a game like chess, the state space will be all the possible configurations of the pieces on the game board. This represents an extremely large number of situations (10^47 states for chess). Under these conditions, it is no longer possible to explore all the possibilities. Consequently, we can no longer determine the consequences of the agent’s actions and thus judge the relevance of his actions. One is therefore forced to forget about manual approaches and turn to other more complex methods (which will be discussed later).

Pathfinding

Before being able to model the behavior of an NPC by exploring the state space, it is necessary to define how the NPC can interact with its environment and, in particular, how it can move around in the game world. Pathfinding, which is the act of finding the shortest path between point A and point B, is therefore often one of the building blocks of a complex AI system. If this brick fails, the whole system will suffer. Indeed, NPCs with a faulty pathfinding may get stuck in the scenery and thus negatively impact the immersion of the player.

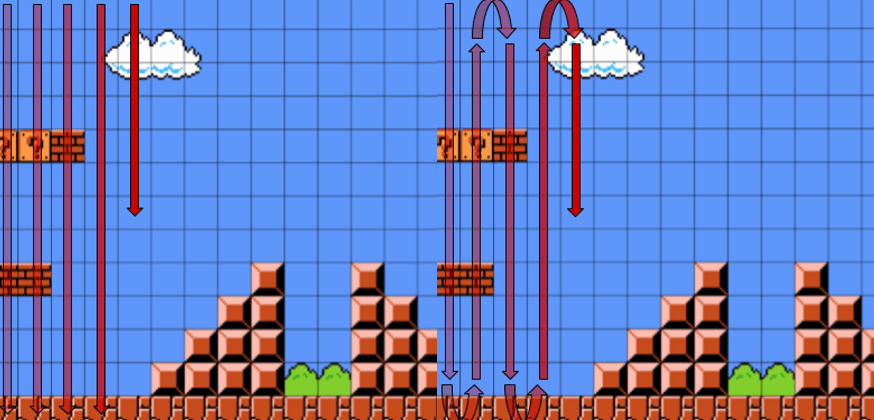

The most used algorithm for pathfinding is the A* algorithm. It is a faster version of an older algorithm, the Dijkstra algorithm. Both are so-called graph pathfinding algorithms. To use these algorithms in a video game, the playing field (which takes into account possible obstacles and hidden parts) is modeled on a 2-dimensional grid (a grid is a form of graph). The algorithm will run in this 2D grid.

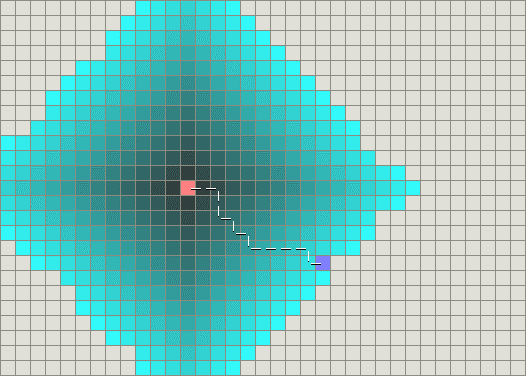

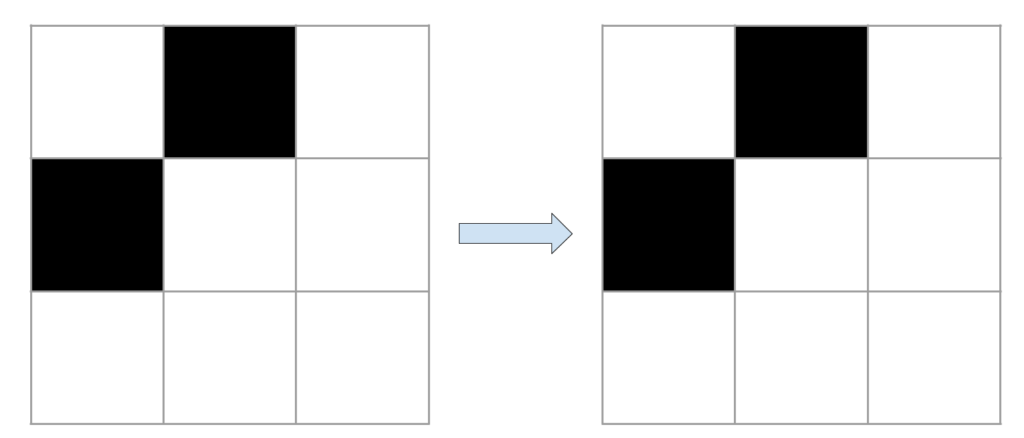

Dijkstra’s principle is relatively simple, he will go through each grid cell in order according to its distance from the starting cell until he finds the destination cell.

We can see with the diagram above that the algorithm has gone through all the blue boxes before finding the purple destination box. This algorithm will always find the best path but it is slow because it goes through a large number of boxes.

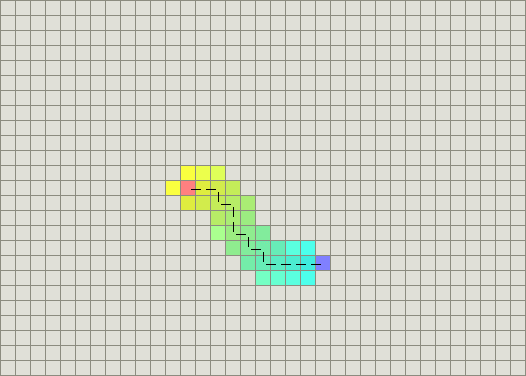

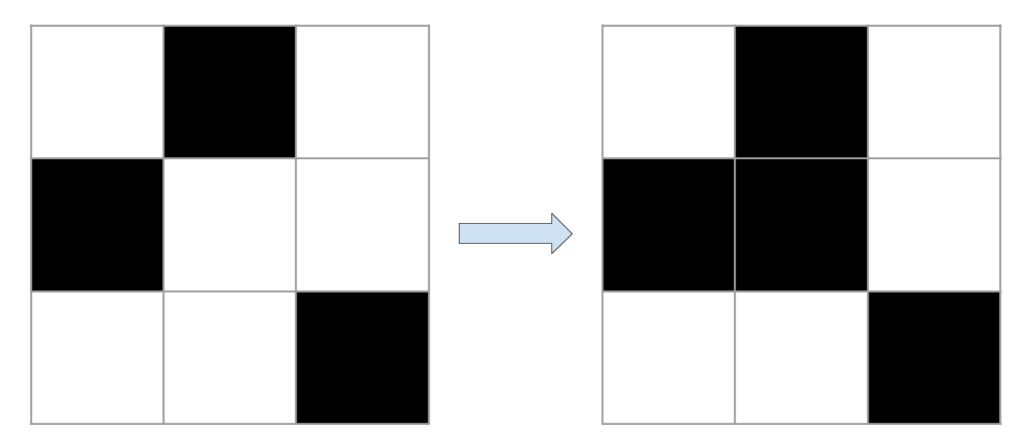

Where Dijkstra always finds the optimal solution, A* may only find an approximate solution. However, he will do it much faster, which is a critical point in a video game. In addition to having the distance information between each square with the starting square, the algorithm calculates approximately the general direction in which he has to go to get closer to the destination. It will then try to run through the graph, always getting closer to the goal, which makes it possible to find a solution much faster.

In more complex cases where the algorithm encounters an obstacle, it will start from the beginning to find another path that gets closer to the destination but avoids the obstacle.

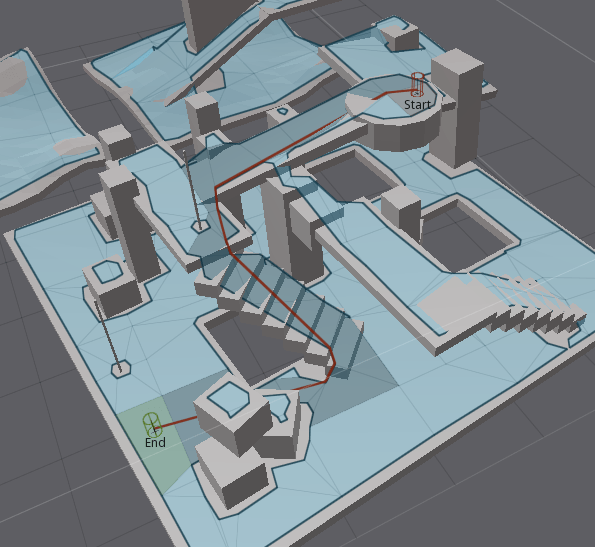

For more recent 3D games, the world is not represented by a grid but by a 3D equivalent called a navigation mesh. A navigation mesh is a simplification of the world that considers only the zones in which it is possible to move in order to facilitate the work of the pathfinding algorithm. The principle of pathfinding remains the same.

These graph pathfinding algorithms may seem simple and one may wonder if they are really AI. It is important to know that a good part of AI can finally be reduced to search algorithms. This feeling that a problem solved by AI was finally not so complicated is called the AI effect.

But when it comes to pathfinding, it’s not such a simple problem, and researchers are still interested in it. For example, in 2012, an alternative to the A* algorithm was developed: the Jump Point Search (JPS) method. It allows, when searching under certain conditions, to skip several squares at once and thus save time compared to A*, which can only search for its path square by square.

Pathfinding implementations in video games today are often variations of A* or JPS, adapted to the specific problems of each game.

Pathfinding is an important component of an NPC’s behavioral system. There are others such as line of sight management (NPCs must not see behind their backs or through walls) or interactions with the environment that are necessary before consistent behavior can be implemented. But since they are generally not managed by AI, we won’t spend more time on them. However, we must not forget that the final system requires all these bricks to work. Once our NPC is able to move and interact, we can develop its behavior.

Ad hoc behavior authoring

Ad-hoc behavior authoring is probably the most common class of method to implement AI in a video game. The very term AI in video games still refers mainly to this approach. Ad Hoc methods are expert systems, i.e. systems where you have to manually define a set of rules that will be used to define the AI behavior. They are therefore manual methods for traversing the state space. Their application is therefore limited to agents confronted with a rather limited number of possible situations, which is generally the case for NPCs. Unlike search algorithms like A*, ad-hoc methods may not really be defined as classical AI, but they are considered as such by the video game industry since its beginning.

Finite state machine

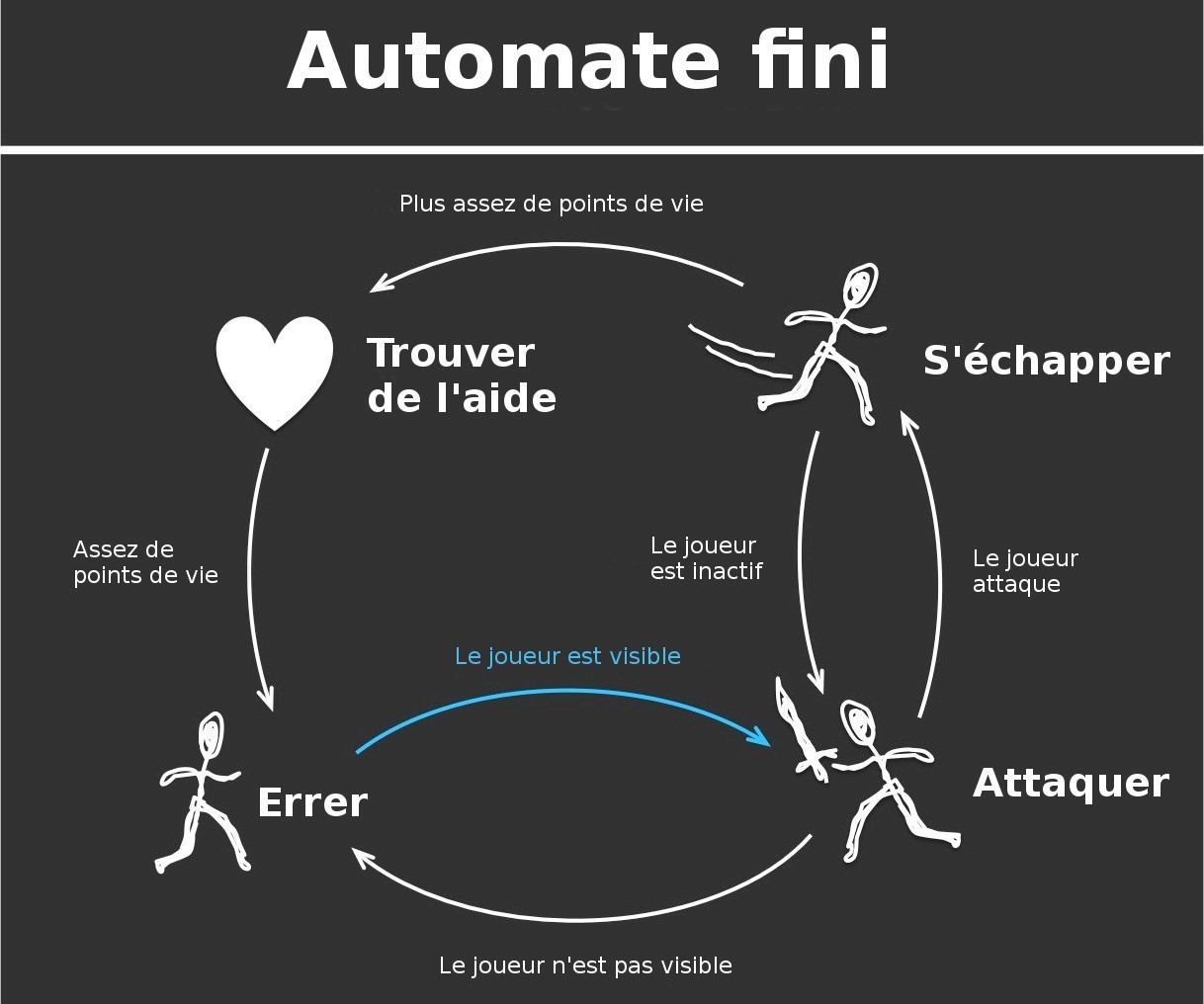

A finite automaton allows to manually define the objectives and stimuli of an agent in order to dictate its behavior. For each objective, one or more states are defined and each state dictates one or more actions for the NPC. Depending on external events or stimuli, the NPC’s objective can change and therefore the automaton can transition from one state to another.

A finite automaton is therefore simply a means of representing the space of an agent’s states as well as all the transitions between its different states. If the states and transitions are well defined, the agent will behave appropriately in all situations. Finite automata are usually represented in the form of a flow diagram as shown in the example below.

The main advantage of finite automata is that they allow you to model a relevant behavior quite simply and quickly since you only need to manually define the rules that define the different states and transitions. They are also practical since they allow you to see visually how an NPC can behave.

However, a finite automaton has no adaptability, since once the automaton is defined and implemented, the behavior of the AI will not be able to evolve or adapt. For the same objective, the NPC will always perform the same actions. It will therefore often present a relatively simple and predictable behavior (although it is always possible to present unpredictability by adding some randomness in transitions for example).

A finite automaton allows to manually define the objectives and stimuli of an agent in order to dictate its behavior. For each objective, one or more states are defined and each state dictates one or more actions for the NPC. Depending on external events or stimuli, the NPC’s objective can change and therefore the automaton can transition from one state to another.

A finite automaton is therefore simply a means of representing the space of an agent’s states as well as all the transitions between its different states. If the states and transitions are well defined, the agent will behave appropriately in all situations. Finite automata are usually represented in the form of a flow diagram as shown in the example below.

The main advantage of finite automata is that they allow to model a relevant behavior quite simply and quickly since it is enough to manually define the rules that define the different states and transitions. They are also practical since they allow you to see visually how an NPC can behave.

Because of their simple design, finite automata allow to complete, modify or debug an AI system relatively easily. It is thus possible to work in an iterative form in order to quickly check the implemented behaviors.

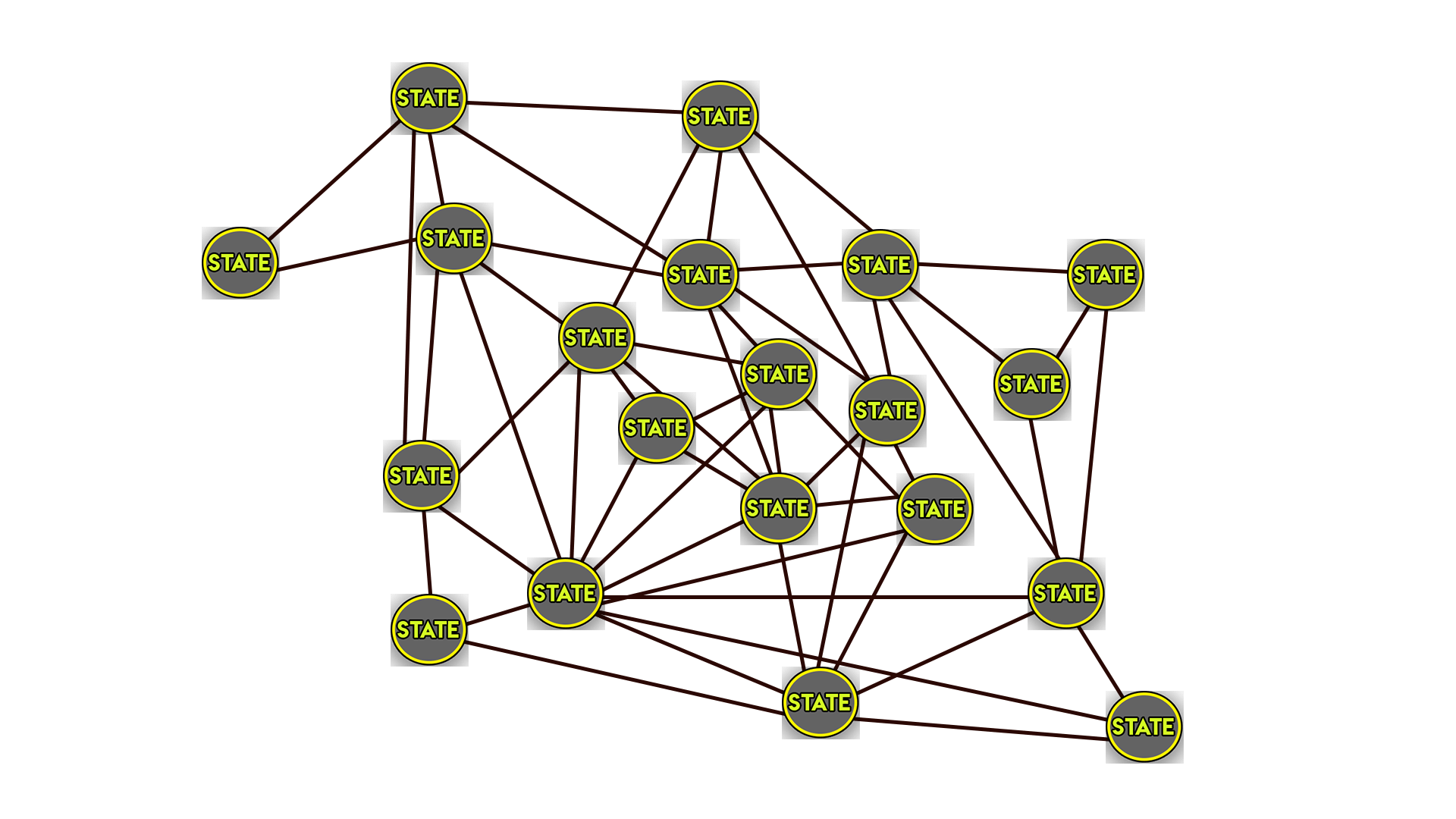

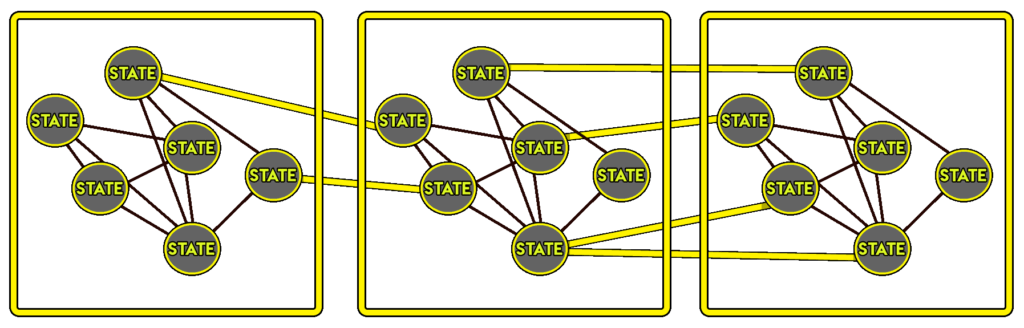

However, when you want to implement a more complex behavior with a finite automaton, it quickly becomes complicated. A large number of states and transitions have to be defined manually and the size of the PLC can quickly impact the ease of debugging and modification.

An evolution of finite automata tries to address this problem: hierarchical finite automata. This approach groups sets of similar states in order to limit the number of transitions between possible states. The objective is to keep the complexity of what we want to model, while simplifying the work of creating and maintaining the model by structuring the behaviors.

Finite automata were the most popular method for building simple behaviors in video games until the mid-2000s. They are still used on some recent games such as the Batman: Arkham or DOOM series (which uses hierarchical finite automata to model the behavior of enemies). However, they are no longer the most frequently used algorithms on the market.

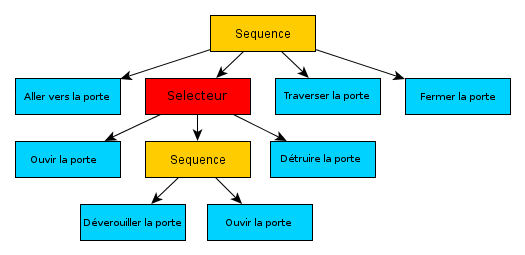

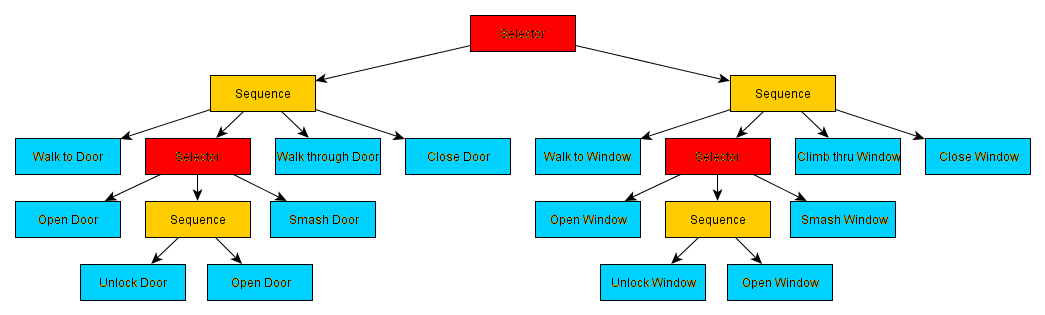

Behavior trees

Behavior trees are quite similar to finite automata, with the difference that they are based directly on actions (and not states), and that they represent the different transitions between actions in the form of a tree. The tree-like representation structure makes it possible to implement complex behaviors much more simply. It is also simpler to maintain, because one gets a better idea of the agent’s possibilities of action by browsing the tree than with a flow chart.

A behavior tree consists of different types of nodes :

- Leaf nodes (above in blue) which have no descendants and represent actions

- The selector nodes (in red) which have several descendants and allow to choose the most relevant one according to the context.

- Sequence nodes (in yellow) which execute all their descendant nodes in order and thus allow to make sequences of actions

A behavior tree is executed from top to bottom and from left to right Starting from the root node (the very first node) to the rightmost leaf. Once you get to the leaf and the action is executed, you return to the root node and scroll to the next leaf.

Thus, in the tree above:

The NPC will go to the door, then depending on the type of door and the items he has on him he will either open the door, unlock it and then open it, or destroy it. He will then go through the door and close it again. We can see that this tree allows you to define different sequences of actions that allow you to reach the same objective: to pass on the other side of a door.

It is of course possible to build much more complete trees that can represent all the behavioral information of the NPC. For example, below is a behavior tree that allows you to go through a door or a window depending on the context.

Behavior trees have globally the same limitations as finite automata. Mainly, they describe a fixed and predictable behavior since all rules and actions are manually defined upstream. However, their architecture in the form of a tree facilitates development and limits errors, thus making it possible to create more complex behaviors.

Behavior trees were first used with Halo 2 in 2004. They have since been used in a number of successful licenses such as Bioshock in 2007 or Spore in 2008 for example.

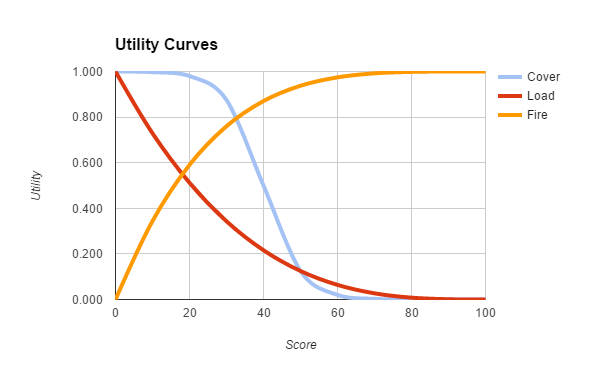

Utility based AI

With this approach, rather than transitioning from one state to another according to external events, an agent will constantly evaluate the different possible actions he can perform at a given moment and choose the action that has the highest utility for him in the present conditions. For each action a utility curve is defined upstream according to the conditions. For example, a utility curve can be defined for shooting the player according to the distance from the player. The closer the player is, the more useful it seems to be to shoot at him to avoid being eliminated. By choosing different types of curves (linear, logarithmic, exponential, etc…) for the different actions, one can prioritize which action to perform under which conditions without having to manually detail all possible cases.

This approach therefore allows the development of complex and much more modular behaviors without having to define a very large number of states. The list of possible actions can easily be completed or modified with this type of approach, which was not the case with finite automata or behavior trees. However, it is still necessary to define all the utility curves and to set them properly to obtain the desired behaviors.

This approach, thanks to its advantages, is increasingly used in video games. Examples include Red Dead Redemption (2010) and Killzone 2 (2009).

In this first part, we have mainly reviewed the classical ad hoc methods. As we have seen, these different methods for modeling behaviors each have their advantages and disadvantages. Here is a small table summarizing the different approaches discussed so far.

A common disadvantage of all these approaches is that the behaviours defined cannot be adapted at all to the way the gambler behaves. In the second article we will therefore look at more advanced methods such as planning or Machine Learning approaches to try to correct this disadvantage.

So far, we have seen ad-hoc methods that manually manage agent actions and stimuli. These methods work well for presenting intelligent behaviors in the short term. However, to design agents that can think in the longer term, it is necessary to use other methods: planning algorithms.

Rather than having to manually define the state space and the different actions to reach a goal, planning is like searching for a solution in the state space to reach a goal.

By using a planning algorithm, we will therefore search for a sequence of actions that allows us to reach a desired state.

Presented for pathfinding, the A* algorithm is not only useful for pathfinding on a grid. Indeed, it can also be used for searching in the space of states and therefore for planning. The state space is often represented in the form of a tree where the nodes are states and the branches are actions. Trees are types of graphs that are particularly suitable for searching.

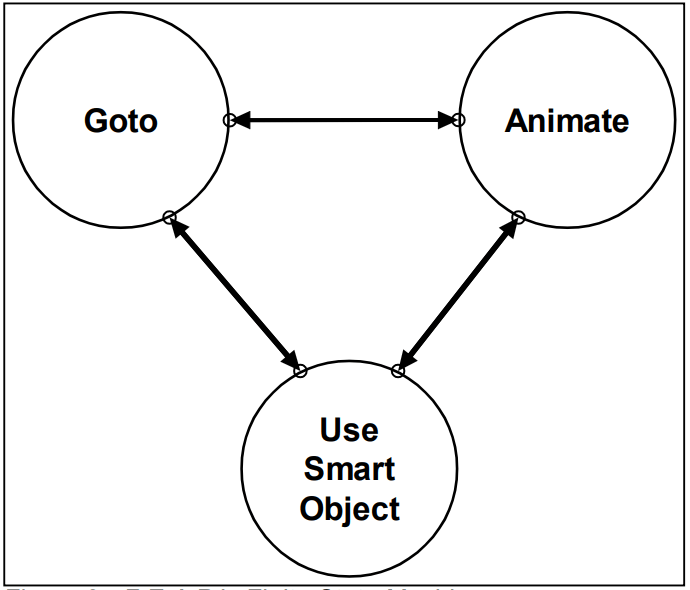

Goal Oriented Action Planning

This approach, used for the first time in 2005 for the F.E.A.R. game, is based on the use of finite automata and a search by A* in the states of these automata.

In the case of a classical finite automaton, the logic that determines when and how to transition from one state to another must be specified manually, which can be problematic for automata with many states as we have seen previously. With the GOAP approach, this logic is determined by the planning system rather than manually. This allows the agent to make his own decisions to switch from one state to another.

With this approach, the state space is represented by a finite automaton. Contrary to the classical approach where we define an automaton with many states that all correspond to a specific action, we will define a small automaton with only a few abstract states. Each abstract state therefore corresponds to large categories of actions that will be context-dependent. For example, the finite automata of the NPCs in F.E.A.R. have only 3 very abstract states. One of the states is used to manage displacements and the two others are used to manage animations. The complex behavior of NPCs is therefore simply a succession of movements and animations.

The interest of the GOAP approach is that, depending on the context in which an objective is defined, several different action plans can be found to solve the same problem. This action plan is constructed through the states of the finite automaton that dictate the movements and animations of the NPC.

Whatever happens, the plan (the sequence of actions) found by the A* algorithm will always be a reflection of the agent’s objective at the present moment. If the NPCs’ objectives are always well defined, they will present a coherent, complex and varied, sometimes even surprising behavior. Indeed, these plans can be more “long term” than those defined manually by a simple finite automaton or a behavior tree. This is why enemies can be seen moving around the player in F.E.A.R for example.

This approach gives very credible behavior in F.E.A.R., but it is not necessarily suitable for all types of games. Indeed, for very open and complex games where the number of possible actions is large, the method may have limitations. In F.E.A.R, the shots found by the search algorithm remain relatively short (usually sequences do not have more than 4 actions), but as the actions and states are defined in an abstract and high level way, a sequence of 4 actions is sufficient to change pieces, move around the player, move objects, take cover etc… If a game needs to find shots with much longer sequences of actions, it is often the case that the algorithm is too heavy in terms of resources and that the shots found diverge too much from the behavior targeted by the designers. Indeed, this kind of approach brings less control over the final result than an ad hoc behavior tree for example.

This is the problem encountered by the developers of the game Transformers: War for Cybertron in 2010. So they decided to change their approach and turned to another planning method: the Hierarchical Task Network planning (HTN) approach. For those who are interested, their issues and the implementation of the approach are explained in this article from AI and Games.

This approach in F.E.A.R was at the time of its release a small revolution in the world of AI in video games and it has since been modified and improved as needed (with the HTN approach for example). For example, this approach can be found in S.T.A.L.K.E.R, Just Cause 2, Tomb Raider, Middle Earth: Shadow of Mordor or Deus Ex: Human Revolution.

The case of strategy games

It is obvious that, depending on the type of game, AI does not have at all the same vocation nor the same possibilities. Most of the methods presented above are used to implement agent behaviors in a world in which the player evolves. Whether it’s a shooting game, or a role-playing game, NPCs are generally characters similar to the player in the sense that they only have control over their own actions. Moreover, a planned behavior in the short or medium term (4 actions for F.E.A.R.) is often sufficient to present a satisfactory behavior.

There are, however, other types of games (especially strategy games) where an agent playing the game must control several units at the same time and implement strategies over the very long term in order to get rid of his opponents. This type of game can be similar to board games such as chess or Go. At any given moment, an agent can perform a very large number of possible actions. The possible actions, relevant or not, depend on the current state of the game (which is derived from all the actions previously taken by the player and his opponent).

In this kind of game, the state space corresponds to the set of possible configurations in which the game can be found. The more complex the game is, the bigger this space is and the more difficult it is for an AI to play it.

Indeed, to be able to play this kind of game, an AI must go through the state space to estimate the relevance of the various possible actions. For a game like chess it is still possible to search in the state space (size 10^47) but for more complicated games like Go it becomes impossible in terms of computing power. Go has about 10^170 states. In comparison, the real-time strategy game Starcraft II has a state space estimated at 10^1685. To give an idea, it is estimated that there are about 10^80 protons in the observable universe.

For a long time AI was not able to beat the best players in board games like chess (it was only in 1997 that AI Deep Blue managed to beat Kasparov, the world chess champion). The game of Go was “solved” only in 2016 thanks, among others, to the emergence of Deep Learning. This means that research and planning methods (such as GOAP in F.E.A.R) cannot work for games like Starcraft and developers had to find other solutions.

The AI of strategy games was therefore based on more simplistic approaches where each unit presented a behavior independent of the others. If each unit is taken independently, its state space is much smaller and it becomes possible to represent it by an ad hoc method (like an automaton or a behavior tree) or to search it to find a sequence of action (in the same way as for NPCs in shooting games for example).

With this approach, it becomes possible to compute the behaviors of all units, but the AI cannot really present a global strategy, as the different units cannot coordinate with each other. So these IAs are generally unable to beat seasoned players.

It is the difficulty of the task that often led the developers of these IAs to cheat, the only solution to present any challenge to the player. It is sometimes still the case today, depending on the type of game. For example in Starcraft II (released in 2010), the highest difficulty levels for the player are managed by cheating AI.

The developers of Starcraft II play here transparency on their AI. Any player who risks himself against the highest difficulty level knows what to expect. Despite this cheating, the game’s AI remains predictable and good players manage to get rid of it without too much difficulty.

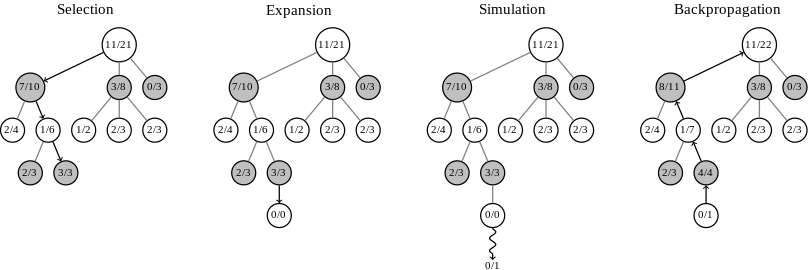

Monte Carlo Tree Search

Recently, a new method has been much talked about for this kind of problems where the space to be searched for is very large. It is the Monte Carlo Tree Search method. Used for the first time in 2006 to play Go, it quickly allowed to improve the systems of the time (which could not yet compete with an amateur player). Then more recently in 2016, it was used as one of the main building blocks of AlphaGo, the algorithm that succeeded in defeating the world Go champion.

This method can of course also be applied to video games. In particular, it was used for the game Total War: Rome II released in 2013. The games in the Total War series feature different game phases:

A turn-based strategy phase on a world map where each player has to manage his country as well as relations with the surrounding countries (diplomacy, war, espionage etc..).

A real-time battle phase on a scale rarely seen in video games (each side often has several thousand units).

The turn-by-turn phase presents a very large space of states in which it is not possible to search for sequences of actions and therefore relevant strategies. It is in this phase of the game that the MCTS method can help. As this phase is played turn by turn, it is possible to take some time to find a good solution. Here the AI system does not have to respond in a few milliseconds as is often the case.

The MCTS method makes it possible to efficiently search for solutions in a space that is impossible to cover completely. The method is based on reinforcement learning concepts, especially the exploration-exploitation dilemma. Exploitation consists in going through the branches of the tree that are thought to be the most relevant to find the best solution. Exploration, on the other hand, consists in going through new branches that we do not know or that we had previously considered irrelevant.

It may seem logical to want to do nothing but exploitation, i.e. always focus on the solutions you think are the best. However the MCTS method is used in cases where it is not possible to search for all the solutions because the tree is too large (otherwise an algorithm like minimax is used). In this case it is not possible to know if the solution we consider is really the best one. Maybe another part of the tree not browsed contains better solutions. Moreover, a branch that is irrelevant at a given moment may become so later, so if we only exploit it we risk missing interesting solutions. The MCTS method allows to find a compromise between exploration and exploitation in order to quickly find satisfactory solutions.

It may seem logical to want to do nothing but exploitation, i.e. always focus on the solutions you think are the best. However the MCTS method is used in cases where it is not possible to search for all the solutions because the tree is too large (otherwise an algorithm like minimax is used). In this case it is not possible to know if the solution we consider is really the best one. Maybe another part of the tree not browsed contains better solutions. Moreover, a branch that is irrelevant at a given moment may become so later, so if we only exploit it we risk missing interesting solutions. The MCTS method allows to find a compromise between exploration and exploitation in order to quickly find satisfactory solutions.

The objective of the Backpropagation step is to ensure that the nodes that seem most relevant are chosen more frequently in the next Selection steps. It is during the Selection phase that the compromise between exploration and exploitation is made. The idea of the MCTS method is to run these four simulation steps thousands of times and when making a decision, to choose the node that has given the most victories over all the simulations.

The advantage of the method is that a maximum execution time can be defined. Up to the allocated time limit, the algorithm will run simulations. Once the time has elapsed it is enough to take the node with the highest victory rate. The more time it is given, the more likely it will find good solutions since the calculated statistics will be accurate. Thus, the solution finally found by MCTS may not be the optimal solution, but it will be the best solution found in the given time. This approach allowed the agent playing in the turn-based strategy phase of Rome II Total War to present relatively advanced strategies despite the very complex state space of the game.

Funnily enough, the AI system for real-time battles is based on ad hoc methods from the Art of War by Sun Tzu (around 500 BC).

A relevant quote even for an AI system

These strategy rules can also be successfully applied in a video game. For example:

- When you surround the enemy, leave an exit door

- Do not try to block the enemy’s path on open ground.

- If you are 10 times more numerous than the enemy, surround him.

- If you outnumber the enemy 5 times, attack him head-on.

- If you are twice as many as the enemy, divide it up.

These simple rules can easily be implemented in an ad hoc system and allow the AI to present relevant behavior in many situations and thus propose realistic battles.

What about machine learning?

Not all the methods presented so far offer learning. Behaviors are governed by manually defined rules and by the way these rules are considered in relation to the environment. To get more complex AIs that can really adapt to various situations, it seems necessary to set up systems that can automatically learn to act according to the player’s behavior. The branch of automatic learning in AI is called Machine Learning.

Machine learning methods have sometimes been used to code the behavior of agents. For example, units in real-time battles in Total War games are controlled by perceptrons (the neural networks ancestors of Deep Learning). In this kind of implementation the networks are very simple (far from the huge networks of Deep Learning).

The inputs of the networks correspond to the state of the game (the situation) and the outputs correspond to the action taken. The learning of these networks is done upstream during the development of the game (sometimes even manually for the simplest networks). This kind of method is ultimately similar to ad hoc methods since the behavior of the units is fixed by the developer and does not evolve during the game.

However, there have been cases where agents could really learn during the game. For example in Black and White (released in 2000) which is known to have managed to mix different AI approaches and get a good result. The principle of the game is very much based on the interaction between the player and a creature with complex behavior.

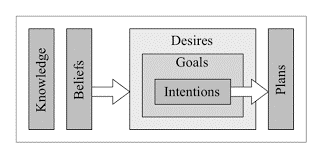

The creature’s decision-making behavior is modeled according to a belief-desire-intention (BDI) agent. The BDI approach is a model of psychology, which is here adapted for video games. This approach, as its name indicates, models, for an agent, a set of beliefs, desires and intentions that dictate his decision making.

The beliefs correspond to the state of the world as seen by the agent. These beliefs can be false if the agent does not have access to all the information. Desires, based on beliefs, represent the agent’s motivations and therefore the short and long term goals he or she will seek to achieve.

Intentions are the action plans that the agent has decided to carry out to achieve his or her objectives. In Black and White, the creature’s beliefs are modeled by decision trees and desires are modeled by perceptrons. For each desire, the creature bases itself on its most relevant beliefs.

The BDI model represents the creature’s decision-making system, but at the beginning of the game, she is like a child whose beliefs and desires are not well defined. During play, the creature learns how to behave through its interactions with the gambler. The gambler may reward or punish the creature based on its actions. Through a reinforcement learning algorithm the creature will discover which actions result in a reward and which result in a punishment. It will then model its behavior to be consistent with what the player asks it to do.

The creature’s behavioral system is therefore a hybrid system based on an ad hoc AI base (the BDI model) for which each component is a self-learning algorithm. These hybrid systems are probably the best compromise to obtain complex and evolutionary behaviors but still remain within an initially defined framework.

Why do we stay on ad hoc then?

This kind of more advanced approach with machine learning is however really not the majority of implementations in video games, and most of the AI recognized as very successful recently like the companion AI in Bioshock Infinite are usually very complex and well thought out ad hoc systems to fit perfectly into the gameplay (with all the cheating that this may require, like teleporting the companion when the player can’t see him for example).

If developers keep on clinging to the classical ad hoc AI methods, it’s firstly because in the end, these methods work well (for example in F.E.A.R or Bioshock Infinite). Indeed, what the designers are trying to create is an illusion of intelligence that is credible to the player, even if the model that handles NPCs is ultimately very simple. As long as the illusion is present, the contract is fulfilled. However, setting up this type of system can in fact quickly become very complex depending on the type of game and therefore require a lot of time and development resources. The majority of AI failures in video games is probably due to a too low investment in AI development rather than to unsuitable methods.

The second important reason that forces developers to stay on systems without learning is the difficulty in predicting AI behavior in all situations. Game designers fear that an AI that learns automatically may break the player’s gaming experience by presenting an unexpected and inconsistent behavior that would take the player out of his immersion in the game world (although it can be noted that this is precisely what happens with a poorly implemented ad hoc AI system). Designers want to fully imagine the experience the player will have and this is much harder to define with an AI that learns automatically.

Another important point is that the NPC’s behavior must be a minimum predictable and present codes that are known and expected by the players. These behavior codes are inherited from the first games that defined the styles and their evolution.

For example, in infiltration games the guards usually do rounds following a specific pattern that the player has to memorize in order to pass without being seen. Implementing a more complex behavior with an AI can risk changing the classic experience of undercover games and is therefore a choice to be made with full knowledge of the facts. Another similar case is boss fighting. In many games boss fights are special phases where the boss has a predetermined behavior that must be understood by the player in order to win.

A boss that adapts too much to the player’s way of playing may surprise players formatted by their past experiences. When implementing an AI in a game, you need to be able to do it while playing with the many clichés of the medium. That’s why the most innovative approaches in terms of AI nowadays are often linked to new game concepts, as classic styles are sometimes considered too rigid to accommodate this kind of AI.

Finally, another reason to stay on ad hoc methods seems to be that this is the way it has always been done in the field, and that starting to innovate without any assurance of success in a framework as limited in terms of time and resources as the development of a video game seems to be a fairly significant risk to take. Especially for development studios whose health may depend on the success or otherwise of a single game.

Evolution of methods

As we have seen, AI methods in video games have evolved over time to become increasingly complex and sophisticated. This makes you wonder why when you play, you have the feeling that AI is stagnating and not really improving. The answer is most likely that games are getting bigger and more complicated and therefore the tasks that IAs have to solve to be consistent are getting more and more complex.

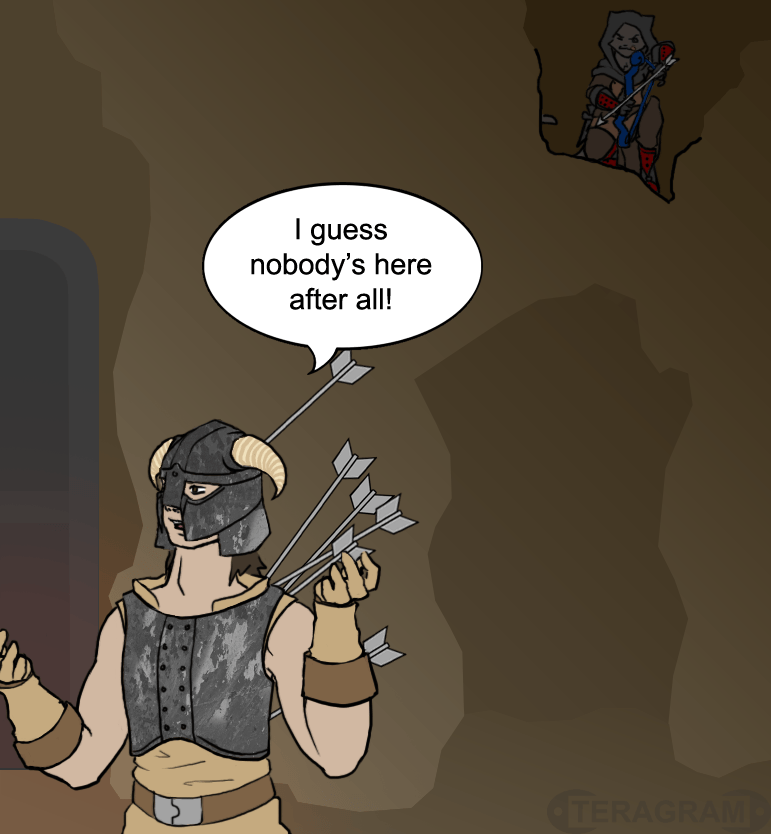

Thus, the games where AI has been a great success are often games where the domain was relatively small. For example in F.E.A.R which focuses on small-scale clashes. The more games are open, the more likely it is that the AI is caught in default. We can take the example of the AI of the bandits in the role-playing game Skyrim.

These have fairly obvious flaws that can lead to the kind of incongruous situations depicted in the image above. The problem in Skyrim is that the NPC AI must be able to handle a very large number of different situations, so there are most likely situations where it will not be suitable.

Another problem in games that are too big is that the amount of content that needs to be generated for this world to be consistent becomes very important. If you want each NPC to be unique and have a story of its own, it’s a huge amount of work. That’s why all the guards in Skyrim all took an arrow in the knee in their youth when they were adventurers.

AI can help us with this content issue through what is called procedural content generation. In the rest of this article we will therefore focus on other less frequent ways to implement AI in video games, and in particular with procedural content generation.

Procedural generation

As we have seen in previous articles, AI in video games is mainly used to implement the behaviors of non-player characters (NPCs). This approach has been so important that other issues potentially addressed by AI have long been neglected. Recently, this kind of alternative approach has started to develop more and more, the main one probably being procedural content generation.

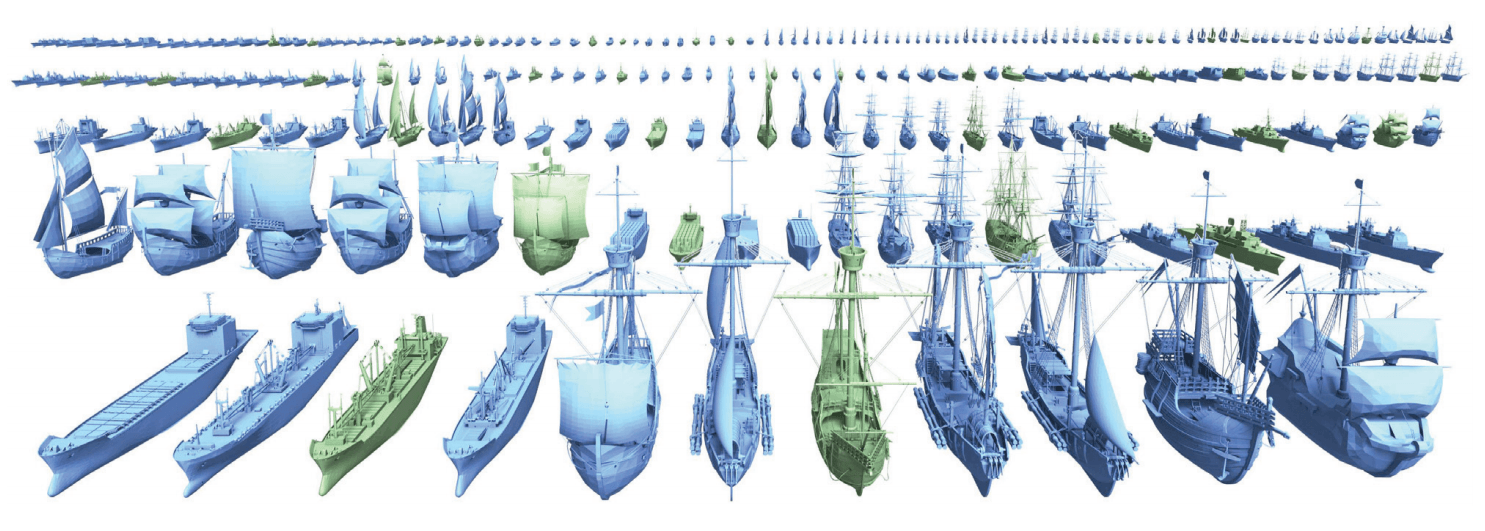

Procedural content generation refers to methods that allow to generate content in a game with little or no human intervention. The content generated can be very varied in nature and can affect almost an entire game. Examples include: levels, terrains, game rules, textures, stories, objects, quests, weapons, music, vehicles, people,… In general, it is considered that the behavior of NPCs and the game engine do not correspond to the content itself. The most frequent use of procedural generation is probably the creation of levels and terrains.

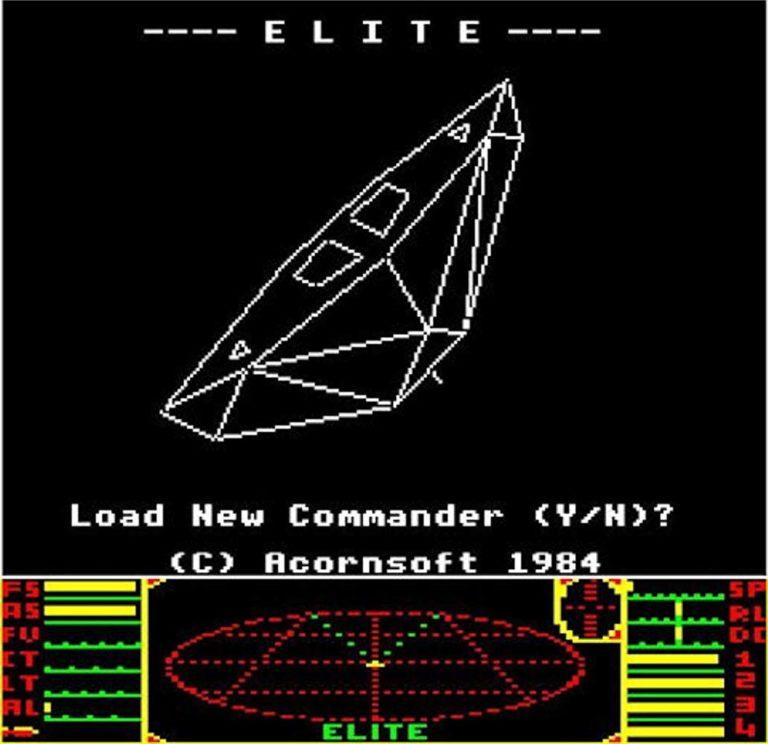

The use of procedural generation has exploded since the mid-2000s, however the field has been around for a very long time. Indeed, in 1980 the game Rogue was already using procedural generation to automatically create its levels and place objects in them. In 1984, Elite, a space commerce simulation game, generated new planetary systems (with their economy, politics and population) during the game.

Objectives

What is the interest of the procedural generation? The first games used procedural generation to reduce storage space. Indeed, by generating the game textures and levels on the fly, it is no longer necessary to store them on the cartridge or other game medium. This made it possible to go beyond the physical limits imposed by the technologies of the time and thus make potentially much larger games. For example, Elite had several hundred planetary systems requiring only a few tens of kilobytes (kb) on the machine.

Nowadays this is no longer the main reason to use procedural generation. Above all, it makes it possible to generate large quantities of content without having to design everything by hand. In the development of a game, the content creation phase can be very heavy and take a large part of the budget. Procedural generation can accelerate the creation of this content.

Anastasia Opara (SEED – Search for Extraordinary Experiences Division – at Electronic Arts) presents in this conference her vision of content generation. For her, content creation presents a phase of creativity that corresponds to the search for the idea and artistic creation, followed by a phase of manufacturing and implementation of this idea in the video game. She presents this phase as a mountain requiring special skills and a very significant investment of time to be overcome. The procedural generation of content can be an approach to try to reduce the size of this mountain and thus allow artists to focus mainly on pure creativity while abstracting from the difficulty of implementation.

In addition to facilitating the content-making phase, procedural generation can also encourage artists’ creativity by offering unexpected and interesting content. The procedural generation thus presents an aspect of collaboration between the artist and the machine. One can thus find galleries of procedurally generated artworks.

The procedural generation of content could thus promote human creation and allow the creation of new game experiences and even potentially new genres of play. One could thus imagine that games could be customized much more than what currently exists by dynamically modifying the game content according to the interactions with the player.

Finally, with a procedural generation capable of producing content in sufficient variety, quality and quantity, it seems possible to create games that never end and therefore with ultimate replayability.

However, as developers have realized (notably those of No Man’s Sky), the amount of content in a game does not necessarily make it interesting. There is therefore a vital compromise to be found between the quantity of content that is generated and its quality (interesting, varied, etc…).

For example, in the Elite game presented earlier, the designers planned to generate 282 trillion galaxies (that’s a lot). The publisher of the game convinced them to limit themselves to 8 galaxies, each composed of 256 stars. With this more measured number of stars to be created, the game’s generator was able to make each star relatively unique, which would have been impossible with the number of galaxies initially planned. Thus the game proposed a good balance between size, density and variability. With the procedural generation of content, one can quickly fall into the trap of a gigantic universe where absolutely everything looks the same. Each element will be mathematically different, but from the player’s point of view, once he has seen one star, he has seen them all.

There is therefore generally a balance to be found between the size of the world, its diversity and the developer’s time allocation. Generating the content through mathematics is the scientific part, while finding the right balance between the different elements is the artistic part.

The different approaches

There are many approaches to procedural content generation and not all of them are based on Artificial Intelligence. Often simple methods are used, such as NPCs’ behavioral design, in order to maintain control over the final rendering more easily. Indeed, the generated content must respect certain constraints in order to be well integrated into the game.

We will therefore first focus on the main classical methods of procedural generation, then we will give an overview (non-exhaustive) of the approaches based on AI.

Constructive procedural generation

Tiles-based approach

The simplest and most frequently used method is the tile-based method. The idea is simple: the game world is divided into subparts (the tiles), for example rooms in a dungeon exploration game. A large number of rooms are modeled by hand. When generating the world, the pre-modelled rooms are randomly selected to create a larger universe. If a large enough number of tiles have been defined beforehand, through the magic of combinatorics, you can obtain a world generator that will almost never produce the same result twice.

With this approach, you get a diversity of results while ensuring local consistency since each tile has been defined manually. In general, this type of approach also implements specific business rules to ensure that the result will be playable and interesting.

The simplest and most frequently used method is the tile-based method. The idea is simple: the game world is divided into subparts (the tiles), for example rooms in a dungeon exploration game. A large number of rooms are modeled by hand. When generating the world, the pre-modelled rooms are randomly selected to create a larger universe. If a large enough number of tiles have been defined beforehand, through the magic of combinatorics, you can obtain a world generator that will almost never produce the same result twice.

With this approach, you get a diversity of results while ensuring local consistency since each tile has been defined manually. In general, this type of approach also implements specific business rules to ensure that the result will be playable and interesting.

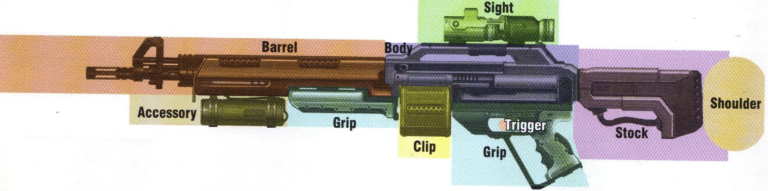

Weapons are cut into sub-parts which are interchangeable. Each weapon generated is a random draw of the different subparts. Each of these has an impact on the weapon’s statistics such as loading time, type of ammunition, accuracy, etc., so that all the generated weapons are slightly different from each other.

Fractal approaches

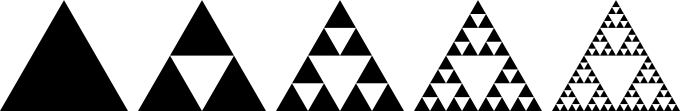

Fractal approaches get their name from the famous mathematical objects since they have some similar properties. Indeed, these procedural generation approaches are based on systems of successive layers at different scales, a bit like fractals. We will see two types of fractal approaches: noise-based methods and grammar-based methods.

Noise-based approaches

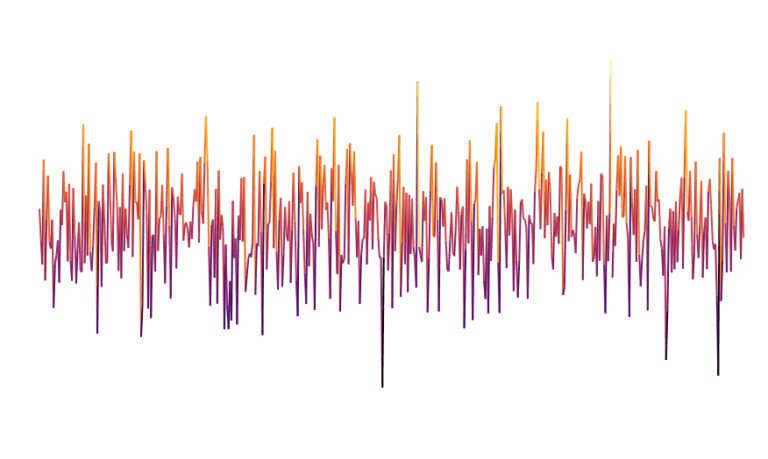

When observing the world from an airplane, the layout of the terrain seems to be relatively random. The idea of noise-based approaches is to rely on randomness to generate terrains that are close to those of the real world. When a sequence of values is randomly drawn, we get what is called white noise.

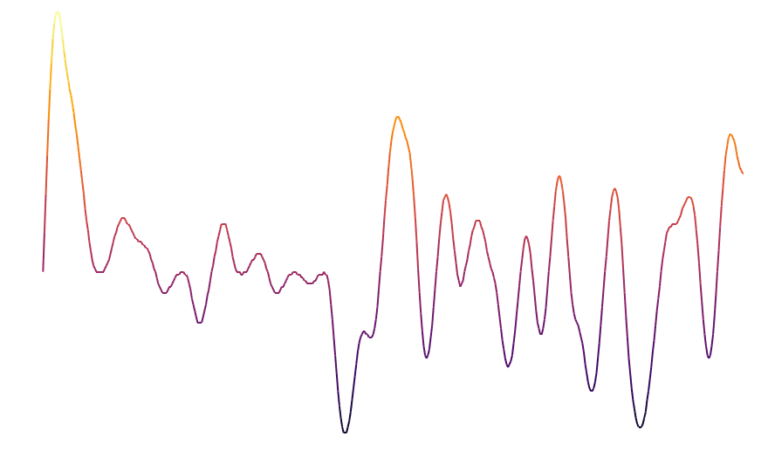

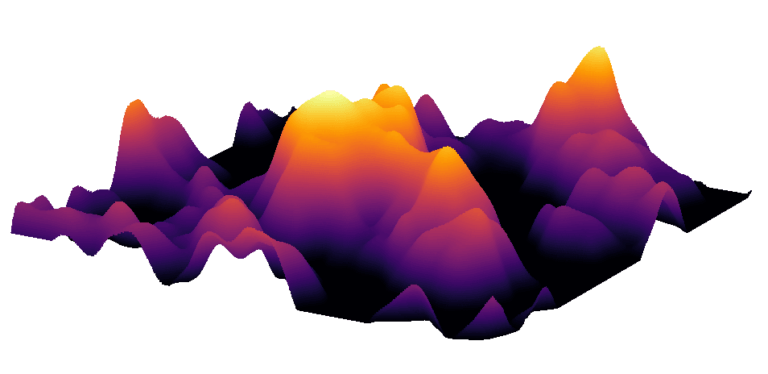

White noise is not a great help for procedural generation since it is the representation of a completely random process (which is clearly not the case in our world). However, there are other types of noise that represent randomness while maintaining a local structure. Probably the best known in the context of procedural generation is probably the Perlin noise. This noise is generated by a random process, however the nearby points are no longer independent of each other as for white noise. This makes it possible to obtain curves like the one shown here which are locally smoother.

Algorithms based on noise become interesting especially when the number of dimensions is increased. Thus, if we take a two-dimensional grid and make a print of white noise and Perlin noise, we obtain the following results. We have white noise on the left and Perlin noise on the right. Each pixel is colored according to its value.

This gives a value for each pixel of the grid. We can then consider the value of this pixel as an altitude and display the grid in 3 dimensions. One thus obtains a representation of a mountainous terrain, entirely generated by Perlin noise. The problem with Perlin noise is that it will tend to generate repetitive elements of relatively similar size. We thus risk obtaining a mountainous but very empty world, without any details between the mountains.

To avoid this problem, several layers of Perlin noise are used at different scales (called octaves). Thus the first layer is used to generate the coarse-grid geography of the world and the following layers add successively finer and finer levels of detail. Thus we find again the idea of fractals.

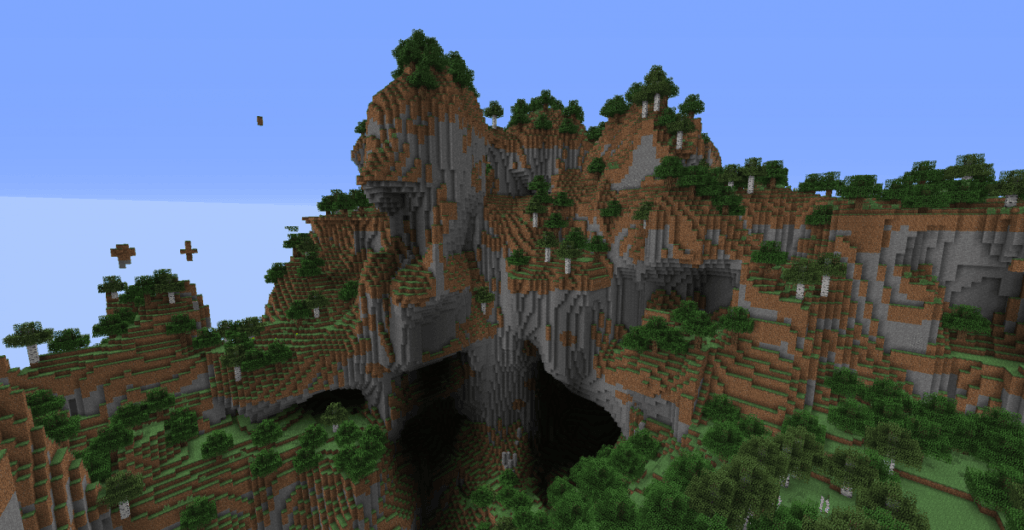

Perlin noise is probably the most used approach for procedural generation since terrain generation is a recurring need in video games. This approach is notably used by Minecraft to generate its worlds.

Many variations exist around the Perlin noise, as it often needs to be adapted to the specific needs of each game. We can mention the Simplex noise which is an improvement of the Perlin noise (proposed by the inventor of the Perlin noise – Ken Perlin – himself). In the game No man’s sky where almost the entire universe is generated procedurally, the planets are based on what the designers call “Uber noise” which is an approach based on the Perlin noise and combining many other types of noise. Their approach is supposed to give more realistic (and therefore more interesting for the player) results than those based only on Perlin noise.

Grammar-based approaches

A formal grammar can be defined as a set of rules that apply to text. The rules of grammar allow you to transform one string of characters into another. For example, the rules of grammar can be used to transform one string of characters into another:

A -> AB

B -> A

With the first rule every time there is an “A” in a string it will be transformed into an “AB” and with the second rule a “B” will be transformed into an “A”.

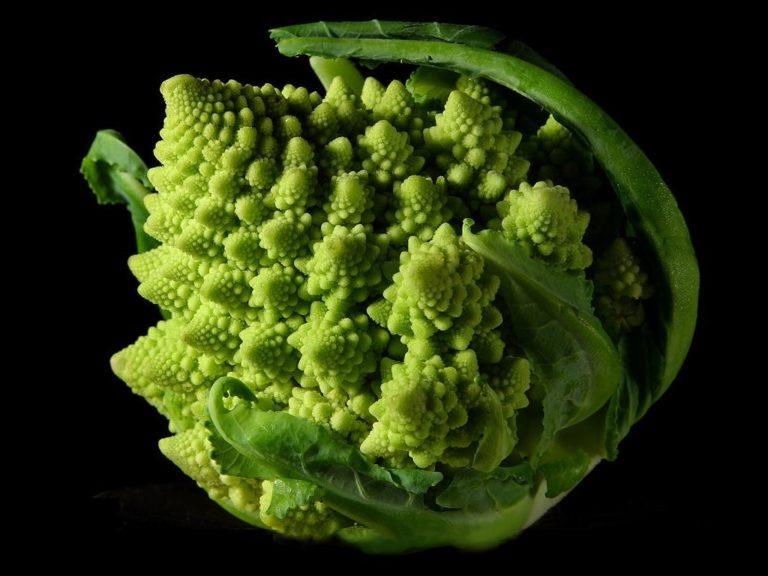

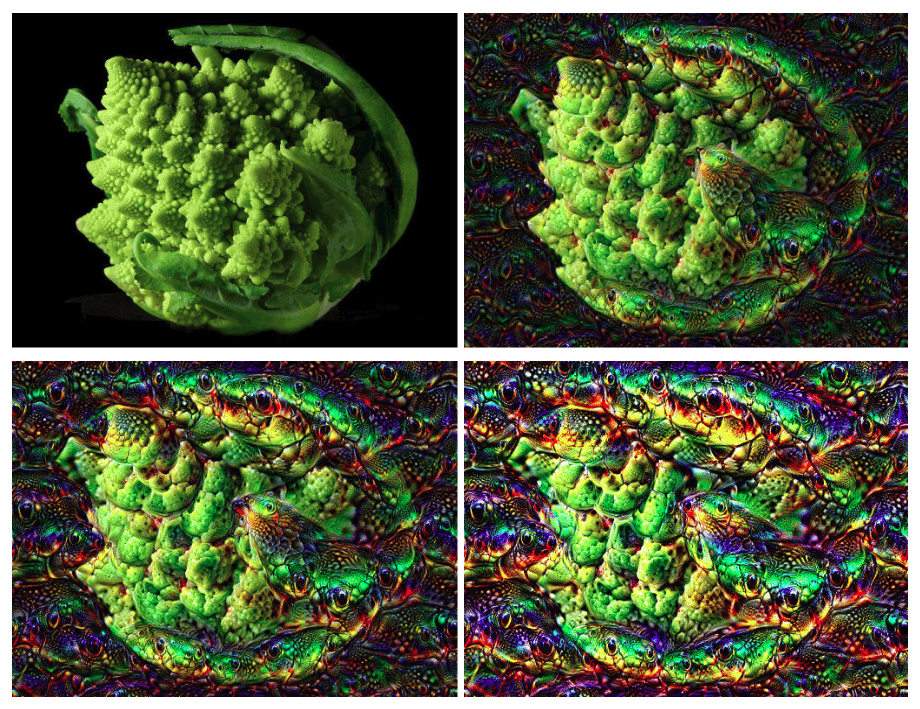

Formal grammars have many applications in computer science and AI, but we will focus on a particular type of grammar called L-systems. L-systems have the particularity of being grammars designed for plant generation. L-systems have recursive rules, which makes it easy to generate fractal forms. In nature many plants have fractal forms, Romanesco cabbage being one of the most striking examples.

An L-system is defined by an alphabet, a set of rules, modifications and a starting axiom (which is the character string we consider to start)

Let’s take a simple example:

A restricted alphabet : {A,B}

Two simple rules:

A → AB

B → A

The starting axiom: A

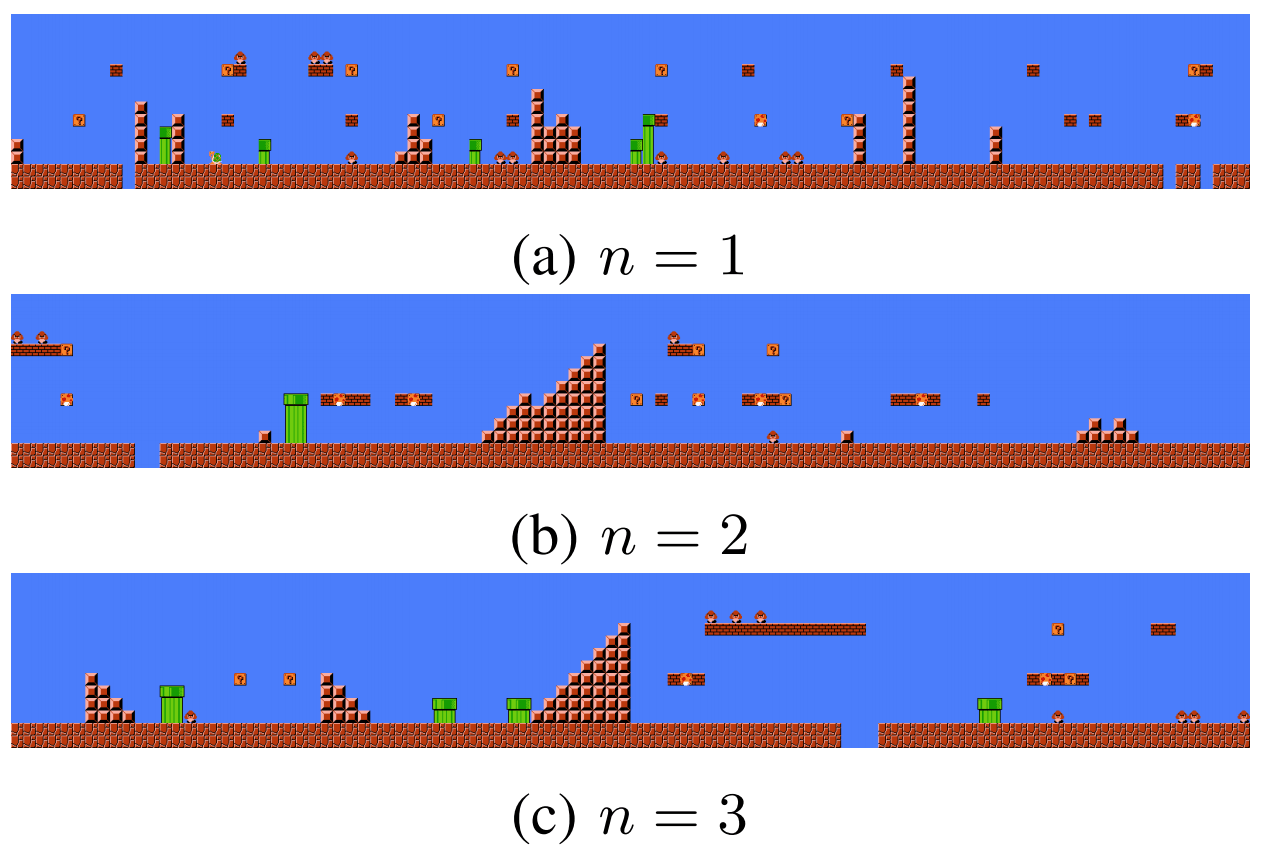

The idea is to apply several times the rules to the previously obtained result (n being the number of times the rule has been applied).

We obtain the following results:

n = 0 -> A

n = 1 -> AB

n = 2 -> ABA

n = 3 -> ABAAB

n = 4 -> ABAABABA

n = 5 -> ABAABABAAB

n = 6 -> ABAABABAABABAABABA

n = 7 -> ABAABABAABAABABAABABAABAABAABAAB

One may wonder where is the link between this string generation and the plants. The idea is to associate to each character of the alphabet a drawing action. The generated strings will therefore be a kind of drawing action.

For example we can consider an L-system with the following alphabet:

F: draw a line of a certain length (e.g. 10 pixels)

+ : turn left 30 degrees

– turn right 30 degrees

: keep track of current position and orientation

[ ] : return to the position and orientation in memory

Only one rule is defined in the grammar :

F → F[-F]F[+F][F]

The starting axiom is: F

Thus, for the different iterations, we obtain the following results. (The colors allow to make the link between the generated strings and the equivalent drawings)

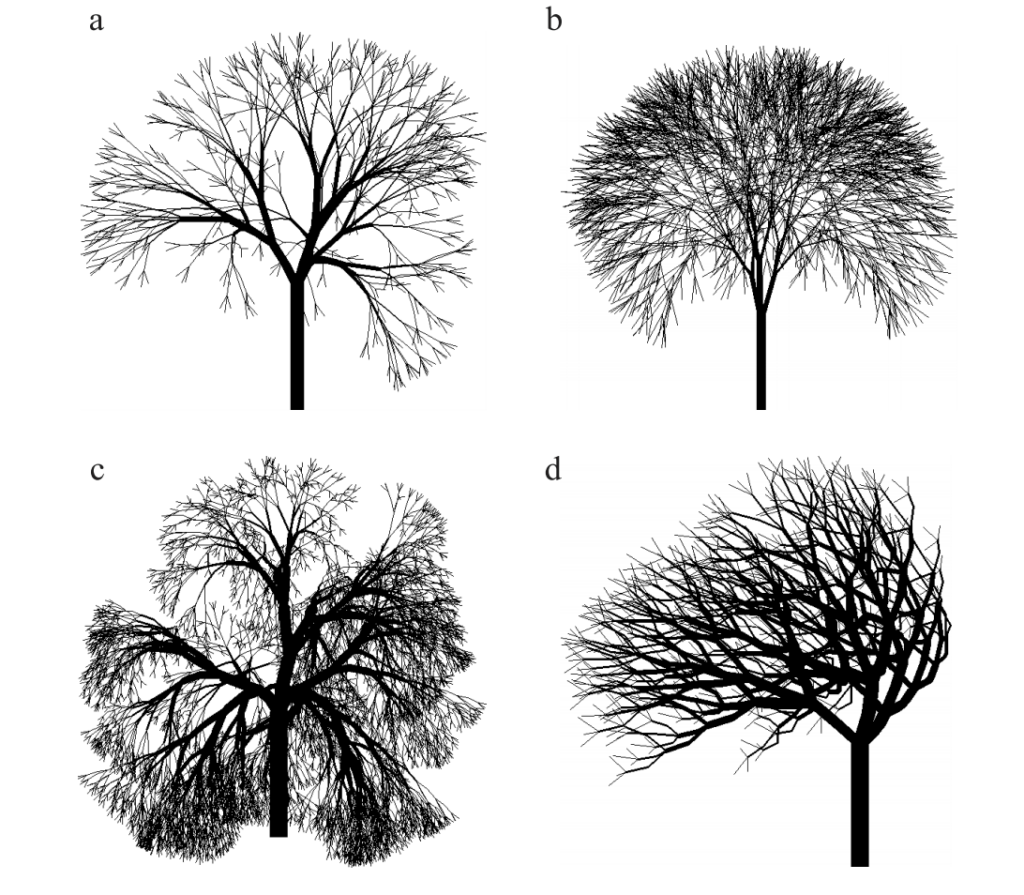

This allows the rule to continue to be applied until a satisfactory result is obtained. The results obtained with this grammar may seem simplistic, but with the right grammar rules and by iterating the algorithm long enough, one can obtain very convincing results such as those presented below. Then you just have to add textures and leaves to obtain a nice vegetation.

L-systems have the advantage of being very simple to define in theory (a single grammar rule can be sufficient). In practice, however, things are not so simple. Indeed, the links between axiom, rule and results in the form of drawings after expansions are very complex. It is therefore difficult to predict the result beforehand and trial and error seems necessary. This site allows you to play with L-systems in order to visualize the predefined grammar results, as well as to modify the rules of these grammars and see the impact on the results.

Another disadvantage is that L-systems are limited to the generation of geometric shapes that have fractal properties. This is why they are mainly used for the generation of plants.

However, there are many extensions to the basic principle of L-systems, such as systems with randomness or context-sensitive systems. These extensions make it possible to produce more varied content. For example, L-systems have sometimes been used to generate dungeons, rocks or cellars.

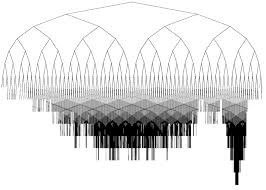

The image presented earlier in the article with a night tree was generated by a grammar system (more complete than the one presented here). This link presents the rules used to generate the image. The results obtained by these methods can be superb. By slightly modifying the grammar rules, different images can be generated.

Cellular automata-based approaches

Cellular automata are discrete calculation models based on grids. Invented and developed in the 1950s mainly by John Von Neumann, they were mainly studied in theoretical computer science, mathematics and biology.

A cellular automaton is therefore based on a grid. The principle is that each cell composing the grid can have different states (for example two possible states: a living state and a dead state). The states of the cells evolve according to the state of the neighbouring cells at a given moment. In cellular automata, time advances in a discrete way, i.e. step by step.

Let’s take the example of the most famous cellular automaton: Conway’s Game of Life to better understand.

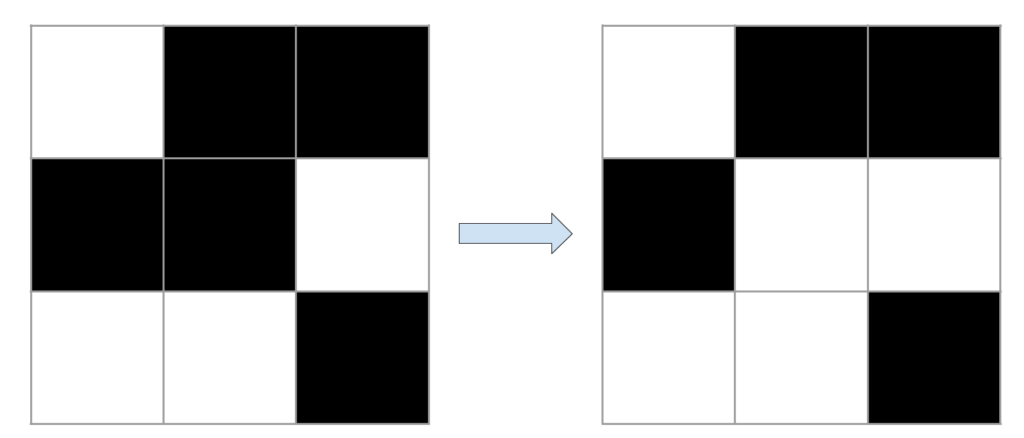

The game of life can be described by two simple rules. At each stage, the state of a cell evolves according to the state of its eight neighbors:

If a cell is dead and has exactly three living neighbors, it goes into the living state.

If a cell is alive and has two or three living neighbors it keeps its state, otherwise it passes to the dead state.

Let’s take some visual examples. Living cells are shown in black and dead cells in white. For each example, it is the state of the middle cell that interests us.

The examples presented above are very simple since we are only interested in the middle box of a very small grid. In general, when studying the behavior of cellular automata, much larger grids are used. In these large grids, more complex shapes can appear and evolve. For example, with the right initial conditions, it is possible to create shapes that move around in the grid. These shapes are called vessels. By considering larger grids and more complex initial conditions, much larger structures can evolve. When the grids become too large, the boundaries between cells are usually no longer represented for better visualization.

Here is an example of a much larger vessel that leaves in its wake objects capable of generating new small diagonal vessels. We can see that a great complexity can appear from an automaton with very simple rules (only two rules for the game of life).

It is possible to build a whole bunch of machines in the game of life and even functional computers (See this link for an example). They are of enormous complexity and extremely slow but they prove that it is possible to get very complex systems from very simple rules. All the elements of a computer (memory, calculation unit, program etc…) are built with different types of objects that it is possible to obtain in the game of life. For example, the bits of information in these computers are represented by small ships. For those of you who want to learn more about the game of life, you can check out this excellent video.

Objects in the game of life generally show behaviors that resemble those found in nature since their evolution is based on simple rules. Cellular automata have therefore been used extensively to model environmental systems. It is for this reason that they are interesting in the context of procedural content generation.

They have thus been used in video games to model rain, fire, fluid flows or explosions. However, they have also been used for map or terrain generation.

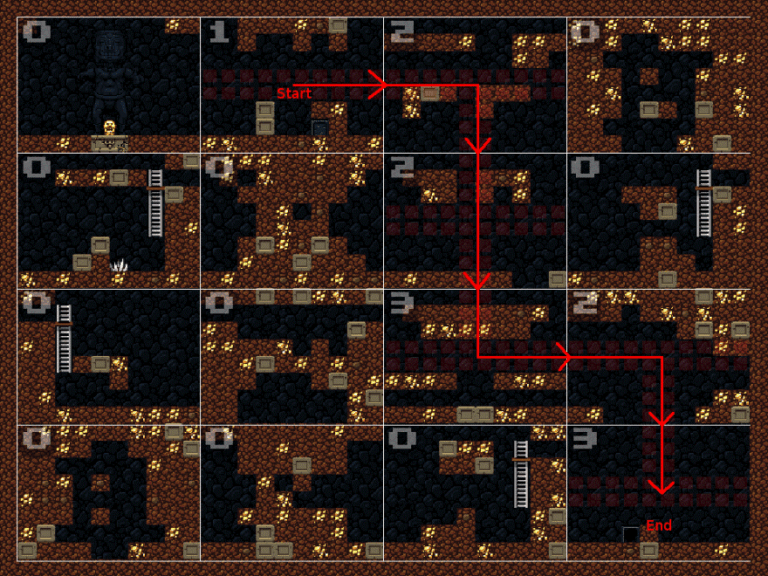

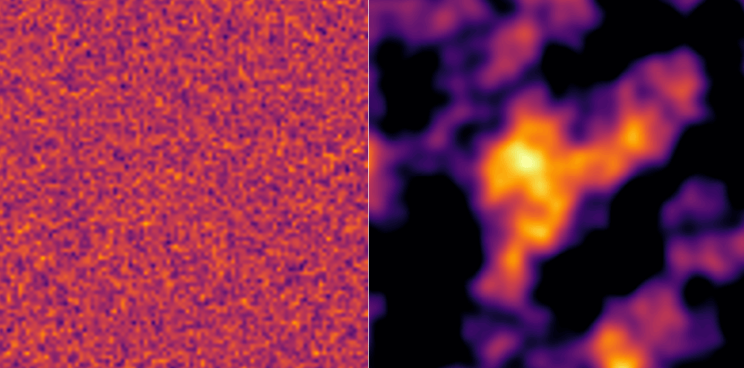

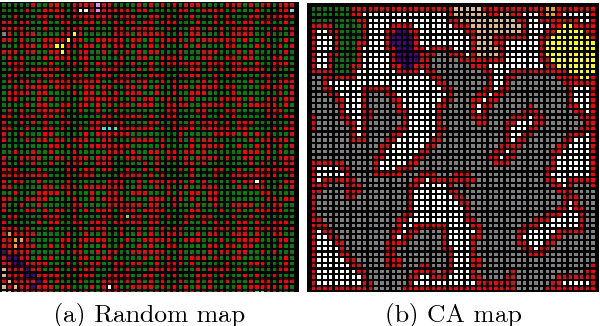

For example, in a game of exploration of a cellar in 2 dimensions seen from above.

In this game, each room is represented by a 50*50 cells grid. Each cell can have two states: empty or rock.

The cellular automaton is defined by a single rule of evolution:

A cell becomes or remains rock type if at least 5 of its neighbors are rock type, otherwise it becomes or remains empty.

The initial state is created randomly, each cell having a 50% chance of being in one of the two states.

By making the grid evolve from its initial state (left) during several time steps, we obtain a result as on the image on the right with grey empty cells in the middle and red and white rock cells. The other colors indicate areas of voids that are not accessible because they are surrounded by rocks. We notice that the cellular automata allow us to represent erosion.

To obtain larger areas, several grids can be generated and then grouped into a new larger grid.

By changing the number of cells to be considered, the rules of the automaton as well as the number of evolution steps, different types of results can be obtained.

Cellular automata (and constructive algorithms in general) have the advantage of having a small number of parameters. They are therefore relatively simple to understand and implement. However, it is difficult to predict the impact on the final result of the change of a parameter since in general the parameters impact several elements of the generated result. Moreover, it is not possible to add criteria or conditions on the result. We cannot therefore ensure the playability or solvency of the generated levels since the gameplay characteristics of the game are independent of the parameters of the algorithm. It is therefore often necessary to add a processing layer to correct possible problems. Thus in the previous example with the cellular automaton it was necessary to create tunnels manually if the generated grids did not have a path.

In the following article, we will therefore focus on more complex methods, based on research or Machine Learning and which allow, among other things, to add conditions and criteria on the generated results.

Procedural generation approaches with AI

Now that we have seen the main classical approaches called constructive methods, let’s look at AI-based approaches. These approaches can be hybridized and mix AI with more classical methods such as those presented previously. Most of the methods presented in this part are not yet really implemented in commercial games but it seems quite likely that they will gradually make their appearance since they allow to go even further in the procedural generation.

Research-based methods

As we have seen in previous articles, search algorithms are an important part of AI, so they are naturally found in the field of procedural generation. With this approach, an optimization algorithm (often a genetic algorithm) searches for content meeting certain criteria defined upstream. The idea is to define a space of possibilities that corresponds to the whole content that can be generated for the game. We consider that a satisfactory solution exists in this space and we will therefore run through it with an algorithm in order to find this satisfactory content. Three main components are necessary for this type of procedural generation:

A search algorithm. This is the heart of the approach. In general a simple genetic algorithm is used but it is possible to use more advanced algorithms that may take into account constraints or specialize on a particular type of content.

A representation of the content. This is how the generated content will be seen by the search algorithm. For example for level generation, levels could be represented as vectors containing information such as the geography of the level, the coordinates of the different elements present in the level and their type etc… From this representation (often vectorial), it must be possible to obtain the content. The search algorithm scours the space of the representations to find the relevant content. The choice of the representation thus impacts the type of content that it will be possible to generate as well as the complexity of the search.

One or more evaluation functions. These are modules that look at a generated content and measure the quality of this content. An evaluation function can for example measure the playability of a level, the interest of a quest or the aesthetic qualities of generated content. Finding good evaluation functions is often one of the most difficult tasks when generating content with search.

Search-based methods are without a doubt the most versatile methods since almost any type of content can be generated. However, they also have their shortcomings, the first being the slowness of the process. Indeed, each content generation takes time since a large number of candidate contents must be evaluated. Moreover, it is often quite difficult to predict how long it will take the algorithm to find a satisfactory solution. These methods are therefore not really applicable in games where content generation must be done in real time or very quickly. Moreover, to obtain good results it is necessary to find a good combination of a search algorithm, a representation of the content and a way to evaluate this content. This good combination can be difficult to find and require iterative work.

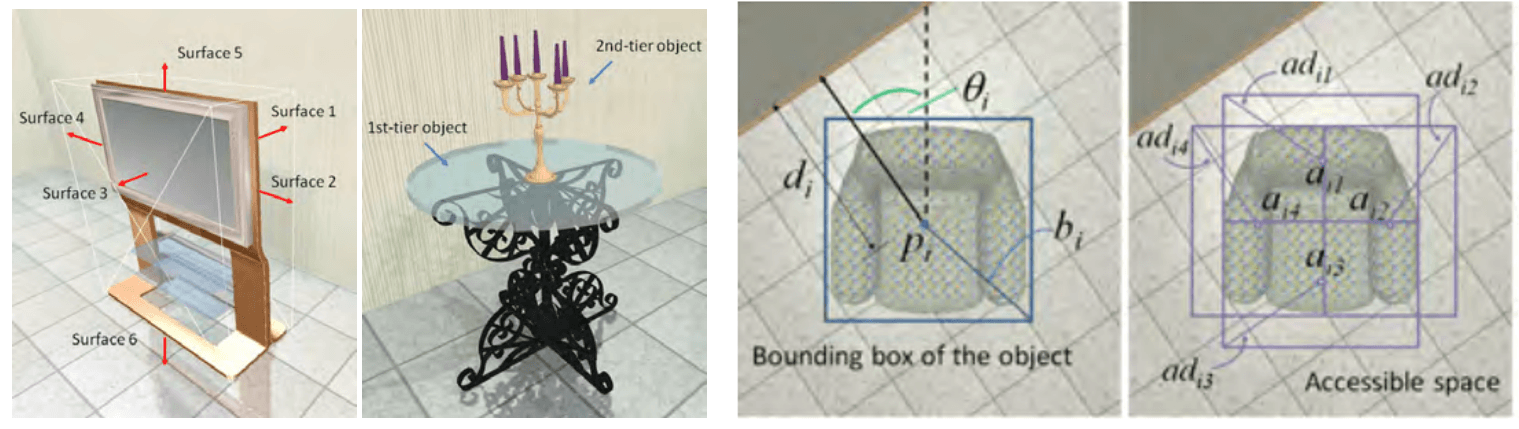

All this being quite theoretical, let’s take an example. One of the use cases where this kind of approach can be interesting is the automatic creation and arrangement of parts. The idea is to be able to arrange a part coherently from a number of objects specified beforehand.

Arranging furniture in a room in a coherent and functional way is a complex task, which has to consider many parameters such as the dependency relationships between different objects, their spatial relationship to the room etc… It is therefore necessary to find a data representation that captures these different relationships.

Data representation

For each object, we assign what is called a bounding box, i.e. a 3-dimensional delimitation of the object. We also assign for each object a center, an orientation, and a direction (for example a sofa with a front and a back side). We also consider for some objects a cone of vision (for example there must be no objects in front of a TV, so we define a cone of vision in front of the TV which must be empty). We also assign other parameters to the objects such as the distance to the nearest wall, the distance to the nearest corner, the height, the necessary space that must be left in front of the object for it to be functional etc… A room is therefore represented by all the parameters of the different objects.

Evaluation function

To know if a part is consistent or not, you need a function that looks at the data representation of a part and returns a score. To know what a satisfactory room is, we use a set of rooms fitted out by hand by a human. We extract relevant characteristics for the placement of the different objects in the room. Thus, we observe the spatial relationships between the different objects. For example, a TV is always against a wall with a sofa in front, a lamp is often placed on a table, access to a door must be unobstructed, etc…

These different criteria are used as a reference in the evaluation function. The evaluation function measures the accessibility, visibility, location and relationships between all objects and looks at whether these values are consistent with those of rooms made by humans. The closer these values are to the example parts the better the score will be.

Research algorithms

The search algorithm used is the Simulated Annealing algorithm which is a very simple algorithm. To start the generation, we tell the algorithm the different objects that we want to put in the room and the search will allow to find a coherent arrangement of these objects. We start with a random arrangement of the objects. At each iteration of the search, a small modification of the room is made. The algorithm allows different layout modifications such as moving an object (rotation and/or translation) and switching the location of two objects.

Then, the new room layout is evaluated by the evaluation function. If the new layout is better than the previous one, it is kept. If, on the other hand, it is worse, it is kept according to a certain probability. This probability of keeping a worse solution is called the temperature and generally decreases with time. Its interest is to avoid ending up in what is called a local optimum, i.e. a good solution but not the best one. The objective at the end of the search is to have found the global optimum and therefore the best solution.

With a fairly long search time, this approach gives very satisfactory results. Other more advanced approaches (such as this one) automatically find the objects to be put in the room.

As said before, this kind of approach is not yet really implemented in commercial games, but we can see right away the interest for environment generation. They have also been used, for example, to create maps in the Starcraft game or in Galactic arm race to automatically find weapon characteristics that appeal to the player.

Other procedural generation approaches can be combined with search methods, such as grammar-based methods. Indeed, since grammars are a representation of content, it is possible to search with an algorithm rather than manually for the rules and grammars best suited to generate its content.

Solver-based methods

Solver-based methods are quite similar to research-based methods since they also involve looking for a solution in a space of possibilities, however the research method is different. Instead of looking for complete solutions that are more and more relevant, these methods first look for partial solutions in order to progressively reduce the space of possibilities to finally find a satisfactory complete solution. Here the search is posed as a problem of constraint satisfaction and the methods of resolution are more specialized (we speak of solvers).

Thus, unlike methods such as the MCTS seen in the previous article which can stop at any time and will always have a solution (probably not optimal but a solution anyway), these approaches cannot be stopped in the research environment since they will then only have a partial solution to the problem.

A simple example of a constraint satisfaction problem is the resolution of a sudoku grid. The constraints are the rules of the game and the space of possibilities corresponds to the set of possible choices for the different boxes. The algorithm will find partial solutions that respect the constraints by filling the grid as it goes along, thus reducing the remaining possibilities. It can happen with this kind of approach to encounter a contradiction where it is no longer possible to advance the resolution while respecting the constraints. If this happens, the research must be restarted.

Usually solvers are advanced enough to try to avoid these problems.

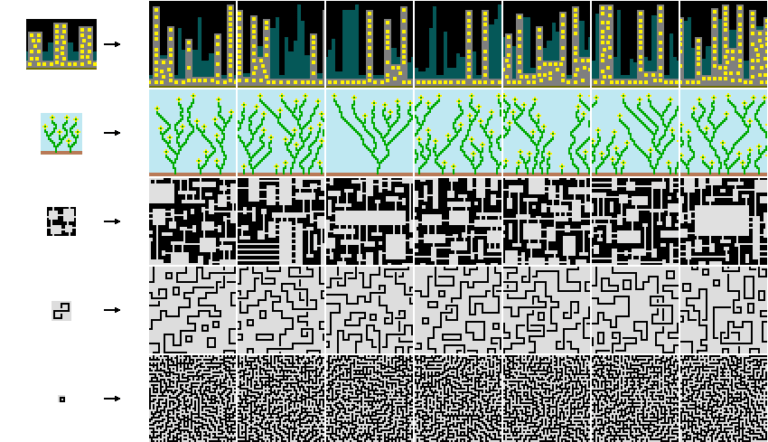

Let’s take an example: the Wave Function Collapse (WFC) algorithm. This approach is really recent, indeed it was proposed in 2016. The approach can be implemented as a constraint satisfaction problem.

The basic idea is to generate a large 2-dimensional image based on a smaller input image. The generated image is constrained to be locally similar to the input image.

The similarity constraints are the following:

Each pattern of a certain size N*N pixel (e.g. 3*3) that is present in the generated image must appear at least once in the base image.

The probability of finding a certain pattern in the generated image should be as similar as possible to its frequency of presence in the initial image.

At the beginning of the generation, the generated image is in a state where each pixel can take any value (color) present in the input image. We can make the analogy with a sudoku grid where at the beginning of the resolution a box can take several values.

The algorithm is as follows:

A zone of size N*N is chosen in the image. In sudoku we start by filling in the boxes where there is only one possible value. Similarly, here the chosen area is the one with the least number of different possibilities. At the beginning of the generation all the zones have the same possibilities since we start from scratch. Therefore a zone is chosen at random to start the algorithm. The chosen zone is replaced by a pattern of the same size present in the initial image. The pattern is chosen according to the frequencies.

The second phase corresponds to a propagation of the information. As a zone has been defined, it is likely that the pixels around this zone are more constrained in terms of possibility. In sudoku, by filling in some boxes, we add constraints on the remaining boxes. Here the principle is the same since the possible patterns are constrained by the basic image.

The algorithm continues by alternating these two phases until all the areas of the generated image are replaced.

Small point of terminology :

In quantum mechanics, an object can be in several states at the same time (cf Schrödinger’s famous cat). This is called superposition of states. This is obviously not possible with objects in classical physics. However, these objects which are in a superposition of states seem to return to only one of the different states when we observe them. It is thus impossible to directly observe the superposition of states. This phenomenon is called wave packet reduction and in English Wave Function Collapse. It is therefore this principle that inspired the designer to develop the algorithm. Indeed, this idea can be found a little bit in the possible values that the different pixels can take, which are reduced as the algorithm progresses.

An interactive version of a simple case of Wave Function Collapse can be found on this website. It is excellent for intuitively understanding how it works and especially how the choice of a cell impacts the possibilities of the surrounding cells.

The github of the WFC algorithm presents a lot of resources and examples of what can be done with this algorithm. Since the algorithm is a constraint satisfaction problem, it is precisely possible to add new constraints (such as the presence of a path for example) to force a different result.

The Wave Function Collapse approach is quite simple to implement and gives very good results. That’s why it has been very quickly implemented in the world of independent video games (for example in Bad North released very recently and in Caves of Qud still in early access).

Machine learning methods

Machine Learning-based procedural generation learns how to model content from previously collected data in order to generate new, similar content. The content data that will be used to learn the model can be created manually or possibly extracted from an existing set for example. In any case, this step of building a dataset is crucial and if the data is not in sufficient quantity and quality the results will most likely be bad.

In search-based approaches, the expected result is defined quite explicitly (through the evaluation function) and a search in the possibility space is carried out to find a solution that corresponds to the required criteria. With Machine Learning, the control over the final result is less explicit since it is the Machine Learning model that will directly generate the content. It is therefore mainly the work on the learning data set that will condition the final result.

In commercial games, this approach is still very little democratized, with developers most often opting for simpler approaches (such as WFC for example). The main reason is probably that it is quite difficult to build a dataset large enough to model the content well. However, research in the field is very active and many new methods are regularly emerging. It is likely that in the coming years, more and more games will rely on these approaches to procedurally generate their universes.

So let’s take a look at some of the interesting possibilities offered by Machine Learning.

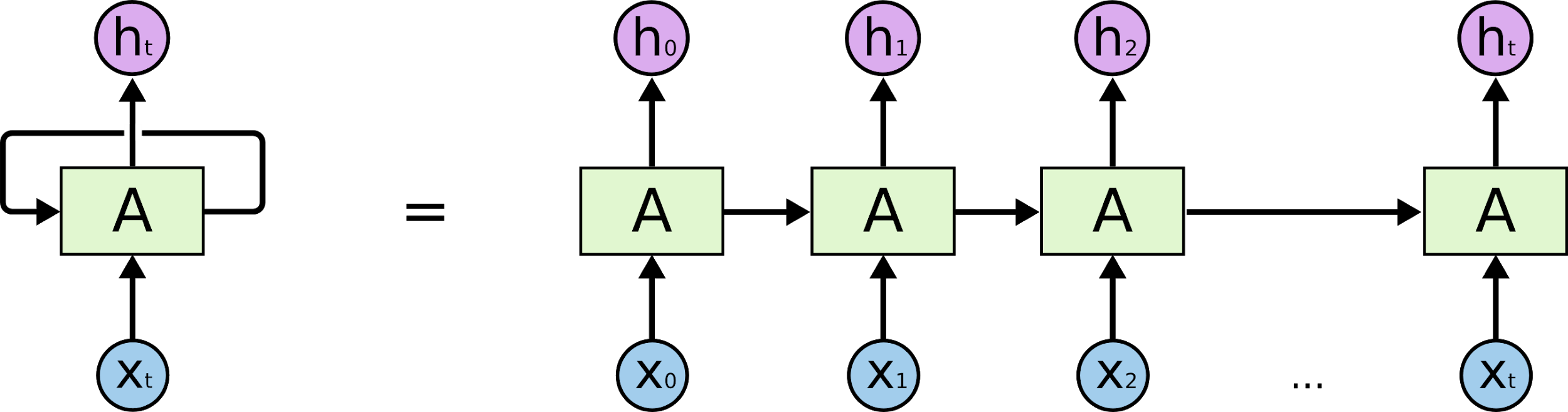

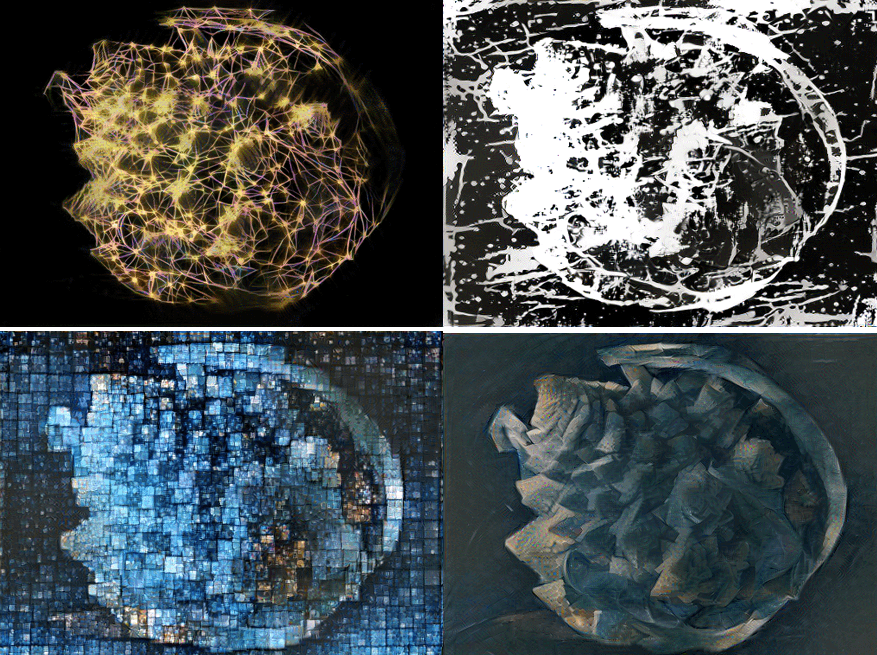

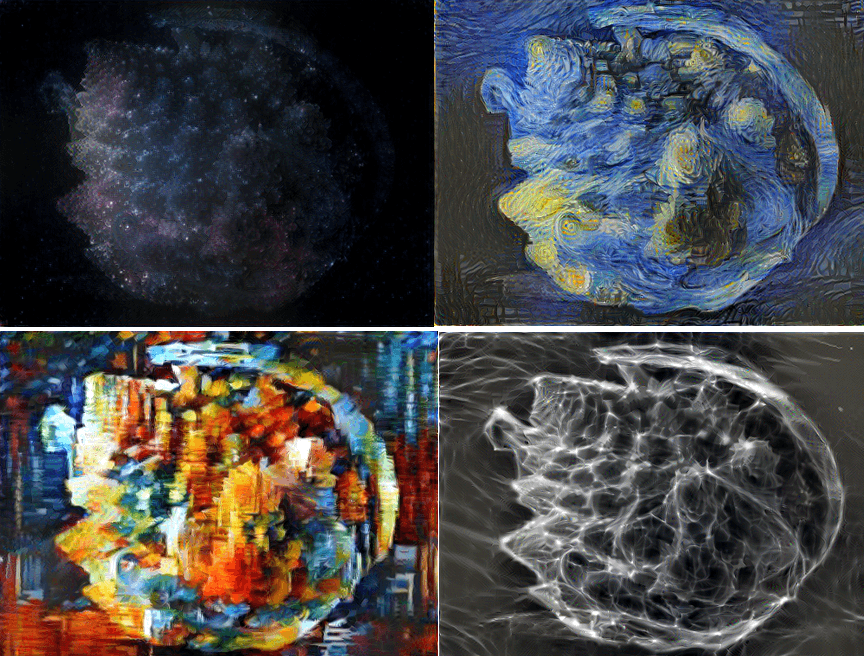

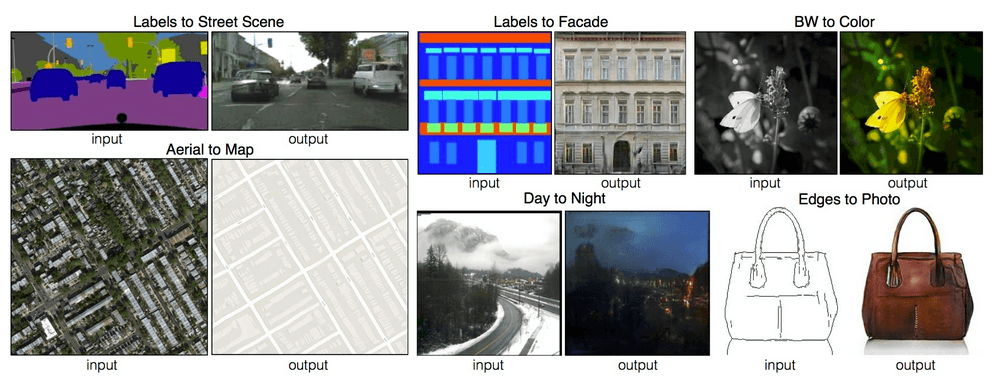

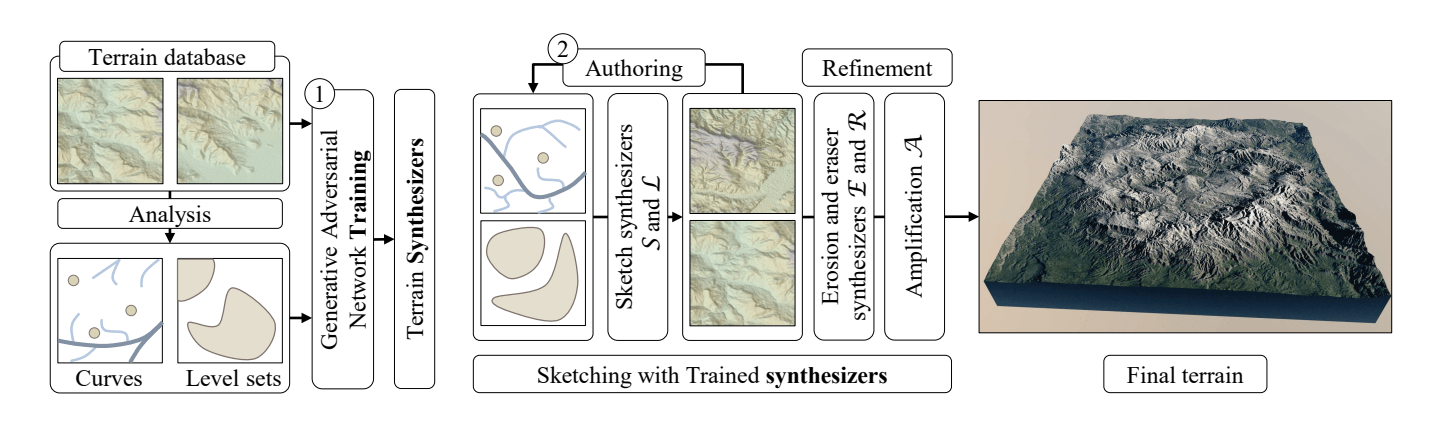

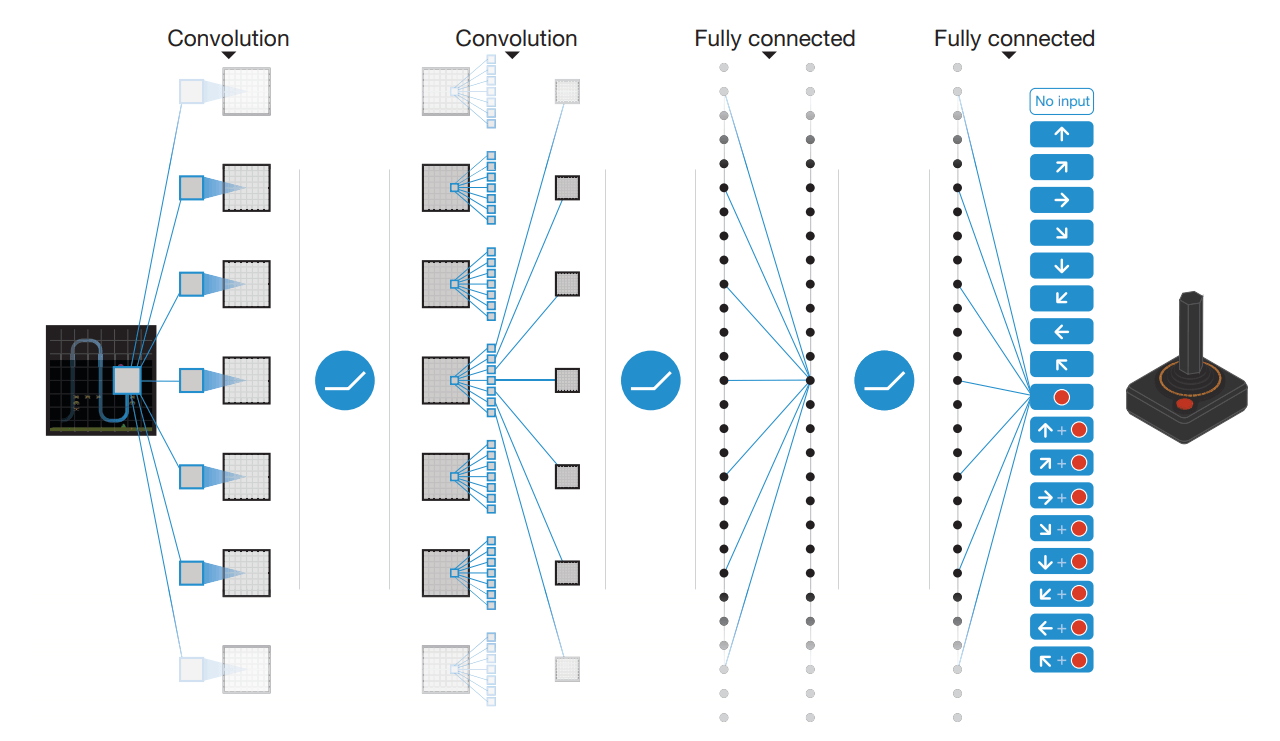

Tiles method with machine learning